Automatic vulnerability detection.

Adversarial Threat Detector

Adversarial Threat Detector makes AI development secure.

In recent years, deep learning has advanced rapidly, and systems built on it are spreading throughout society, such as face recognition, security cameras (anomaly detection), and ADAS (Advanced Driver-Assistance Systems).

On the other hand, there are many attacks that exploit vulnerabilities in deep learning algorithms. For example, an Evasion Attack causes the target classifier to misclassify Adversarial Examples into the class intended by the adversary. An Exfiltration Attack steals the parameters and training data of a target classifier. If your system is vulnerable to these attacks, it can lead to serious incidents, such as a breached face recognition system allowing unauthorized intrusion, or information leakage through inference of the training data.

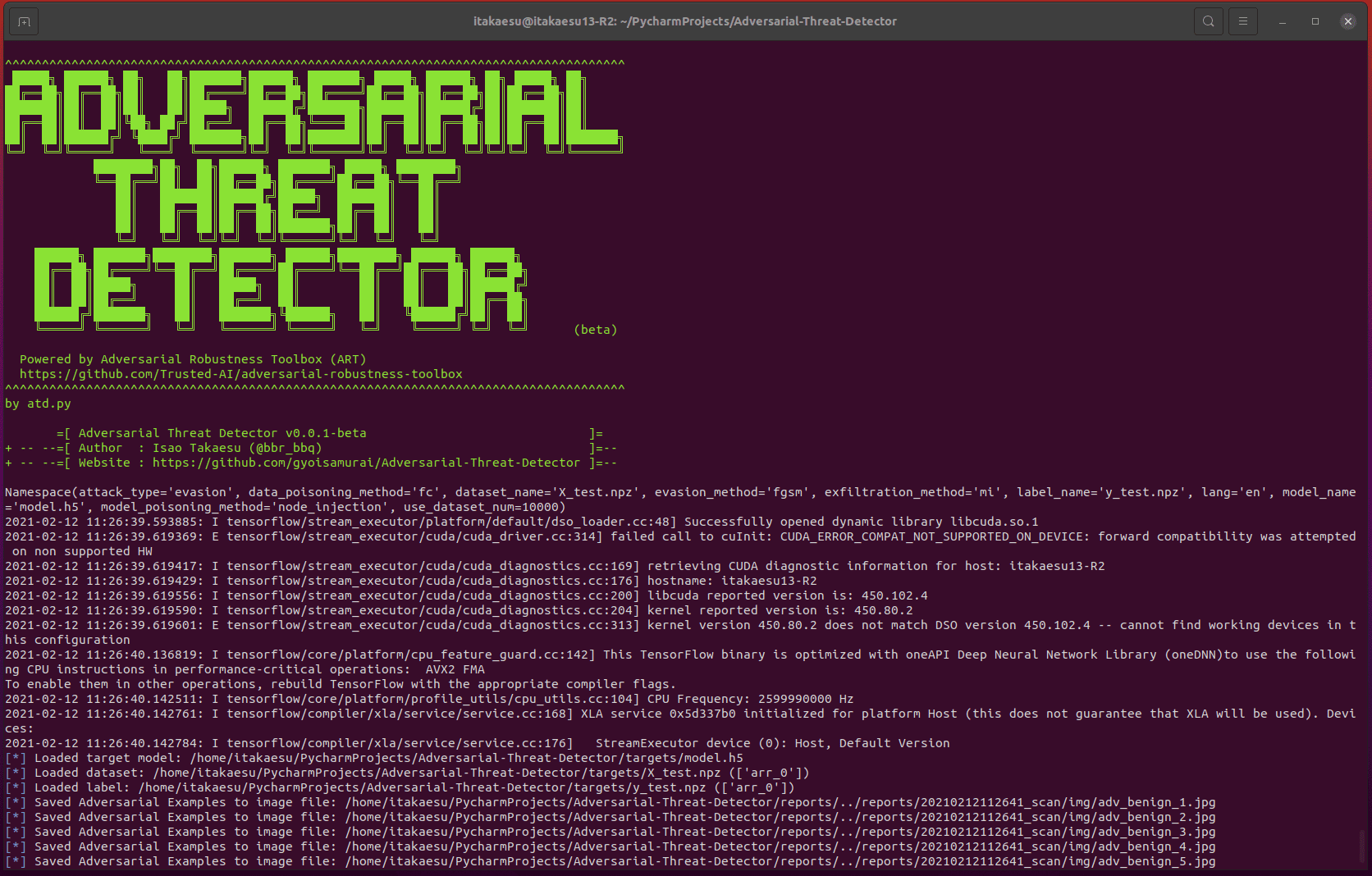
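To make the idea of an Evasion Attack concrete, here is a minimal sketch of a targeted, single-step signed-gradient perturbation (in the spirit of the Fast Gradient Sign Method) against a toy logistic-regression classifier. It is not ATD's implementation and does not use ART; the weights, the input, and the names `predict_proba`, `predict`, and `targeted_fgsm` are all assumptions made up for illustration.

```python
import math

# Toy binary classifier: p(class 1 | x) = sigmoid(w . x + b).
# The weights and bias are made-up values for illustration only.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25


def predict_proba(x):
    """Return the probability that x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    """Return the predicted class label (0 or 1)."""
    return 1 if predict_proba(x) >= 0.5 else 0


def targeted_fgsm(x, target, eps):
    """Take one signed-gradient step that pushes x toward `target`.

    For logistic regression, the gradient of the cross-entropy loss
    (computed against the target label) with respect to the input is
    (p - target) * w. Stepping against its sign lowers that loss.
    """
    p = predict_proba(x)
    grad = [(p - target) * w for w in WEIGHTS]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi - eps * s for xi, s in zip(x, sign)]


x_clean = [-0.2, 0.4, 0.1]  # classified as class 0
x_adv = targeted_fgsm(x_clean, target=1, eps=0.5)  # adversary wants class 1

print("clean prediction:      ", predict(x_clean))
print("adversarial prediction:", predict(x_adv))
```

Each feature moves by at most `eps`, yet the predicted class flips to the one the adversary chose. Attacks on real deep learning classifiers follow the same principle but obtain the gradient through backpropagation.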

So we released a vulnerability scanner called “Adversarial Threat Detector” (a.k.a. ATD), which automatically detects vulnerabilities in deep learning-based classifiers.

ATD contributes to the security of your classifier by executing a four-step cycle: “Detecting vulnerabilities (Scanning & Detection)”, “Understanding vulnerabilities (Understanding)”, “Fixing vulnerabilities (Fix)”, and “Checking fixed vulnerabilities (Re-Scanning)”.

ATD follows the Adversarial Threat Matrix, which summarizes threats to machine learning systems. ATD currently uses the Adversarial Robustness Toolbox (ART), a security library for machine learning, as its core engine. ATD is still in beta, but we will release new functions once a month, so please check our release information.

ATD’s secure cycle.

1. Detecting vulnerabilities (Scanning & Detection)

ATD automatically executes a variety of attacks against the classifier and detects vulnerabilities.
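The scanning step can be pictured roughly as the loop below: run each attack, measure accuracy on the perturbed inputs, and flag any attack that degrades accuracy beyond a threshold. This is only a hypothetical sketch, not ATD's actual code or API. The toy classifier, the `shift_attack` helper, the `scan` function, and the `max_drop` threshold are all assumptions introduced for illustration.

```python
# Hypothetical sketch of a scan loop; none of these names come from ATD.

def classify(x):
    """Toy 1-D classifier: class 1 if the feature is at least 0.5."""
    return 1 if x >= 0.5 else 0


def shift_attack(eps):
    """Build an attack that moves each input toward the decision boundary by eps."""
    def attack(x, label):
        return x - eps if label == 1 else x + eps
    return attack


def accuracy(inputs, labels):
    """Fraction of inputs whose predicted class matches the true label."""
    correct = sum(classify(x) == y for x, y in zip(inputs, labels))
    return correct / len(labels)


def scan(inputs, labels, attacks, max_drop=0.2):
    """Run every attack and report those that cut accuracy by more than max_drop."""
    baseline = accuracy(inputs, labels)
    findings = []
    for name, attack in attacks.items():
        adversarial = [attack(x, y) for x, y in zip(inputs, labels)]
        adv_acc = accuracy(adversarial, labels)
        if baseline - adv_acc > max_drop:
            findings.append(
                {"attack": name, "clean": baseline, "adversarial": adv_acc}
            )
    return findings


inputs = [0.1, 0.3, 0.45, 0.55, 0.7, 0.9]
labels = [0, 0, 0, 1, 1, 1]
attacks = {
    "shift_eps_0.01": shift_attack(0.01),  # too small to cross the boundary
    "shift_eps_0.3": shift_attack(0.3),    # large enough to flip several inputs
}

findings = scan(inputs, labels, attacks)
for finding in findings:
    print(finding)
```

Reporting the clean and adversarial accuracy side by side gives a developer a first indication of how severe each detected weakness is.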

2. Understanding vulnerabilities (Understanding)

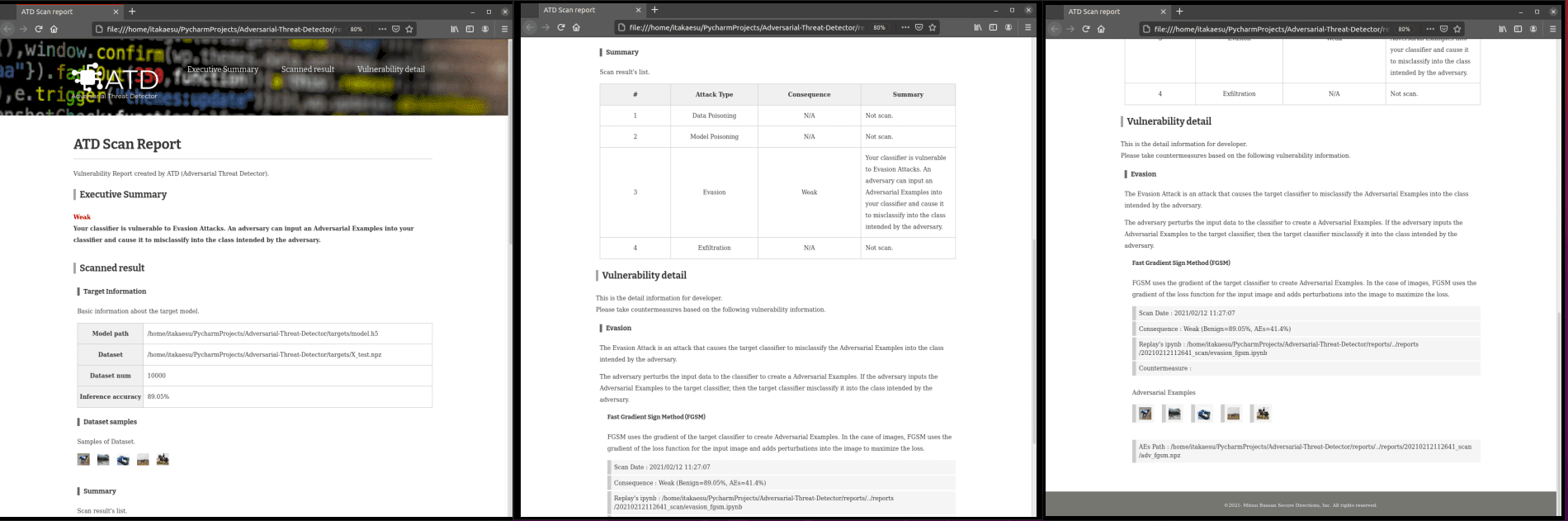

When a vulnerability is detected, ATD will generate a countermeasure report (HTML style) and a replay environment (ipynb style) of the vulnerabilities. Developers can understand the vulnerabilities by referring to the countermeasure report and the replay environment.

- Countermeasure report (HTML style)

Developers can fix the vulnerabilities by referring to the vulnerability overview and countermeasures.

A sample report is here.

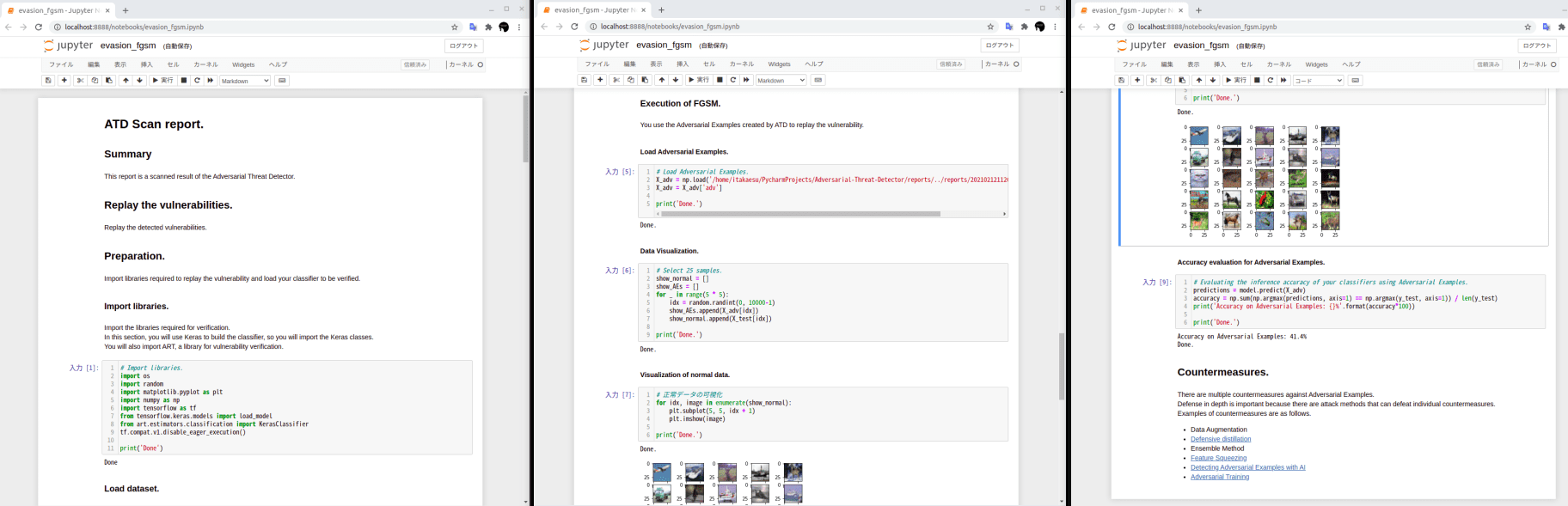

- Vulnerabilities Replay environment (ipynb style)

By opening the ipynb file automatically generated by ATD in Jupyter Notebook or a similar tool, developers can replay the attack against the classifier and see how the vulnerability is exploited.

The sample notebook is here.

3. Fixing vulnerabilities (Fix)

ATD automatically fixes detected vulnerabilities.

* This feature will be supported in the next release.

4. Checking fixed vulnerabilities (Re-Scanning)

ATD re-scans the fixed classifier to check that the detected vulnerabilities have been resolved.

* This feature will be supported in the next release.

Install & Use

Copyright (c) 2021 Adversarial Threat Detector