Google Formally Integrates Kubernetes Engine and GPU Services

Recently, Google Cloud Services has enhanced many features related to deep learning and machine learning applications. It first expanded the hardware specifications of the virtual host, and introduced ultra men specification virtual machines with large memory, allowing users to perform high-performance computing while also implementing a public cloud platform. The GPU and TPU will also launch shortly. Businesses can consume GPU and TPU resources at a lower price than on-demand services. This time, Google announced that public cloud GPU services are now officially integrated with Kubernetes Engine (GKE).

Currently, there are three options for GCP’s GPU hardware, from the entry-level K80 price to mid-level P100 and high-end V100, allowing users to make choices as needed. For users who want to use new features, Google now also offers a $300 free trial.

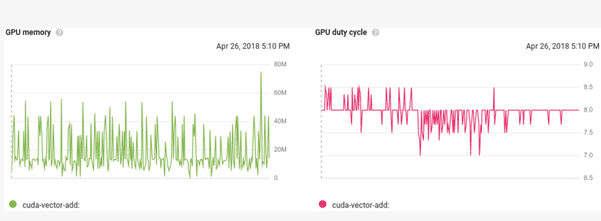

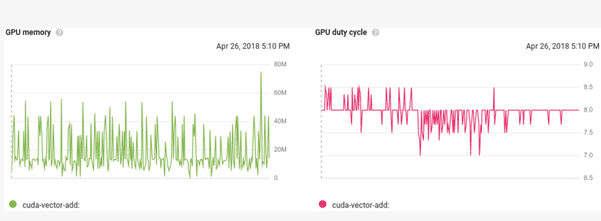

After this release, the company’s container applications running in the GKE environment will be able to use GPU services and execute CUDA workloads. Google said: “By using GPUs in Kubernetes Engine for your CUDA workloads, you benefit from the massive processing power of GPUs whenever you need, without having to manage hardware or even VMs.” It can use with the market’s officially launched preemptive GPU service. This will reduce the cost of machine learning operations. This feature is now also integrated with the Google CloudStack Monitoring Service Stackdriver. Users can observe the current frequency of GPU resource access, availability of GPU resources, or GPU configuration.

Also, companies that use GPU services in the Kubernetes environment can also use some of the existing features of the Google Kubernetes engine. Like node resource pooling, applications on existing Kubernetes clusters can access GPU resources. When the resiliency of enterprise applications changes, you can use the cluster expansion feature. The system can automatically extend the built-in GPU’s nodes. When no Pod in the infrastructure needs to access GPU resources, the system automatically shuts down the expansion node. GKE also ensures that the pods on the nodes are all pods that need access to GPU resources to avoid deploying pods that do not have GPU requirements to these nodes. System administrators can use the resource quota feature to limit the GPU resources that each user can access when multiple teams share large clusters.

This feature is now also integrated with Google’s cloud monitoring service Stackdriver. Users can observe the current frequency of GPU resource access, availability of GPU resources, or GPU configuration.

Source, Image: googleblog