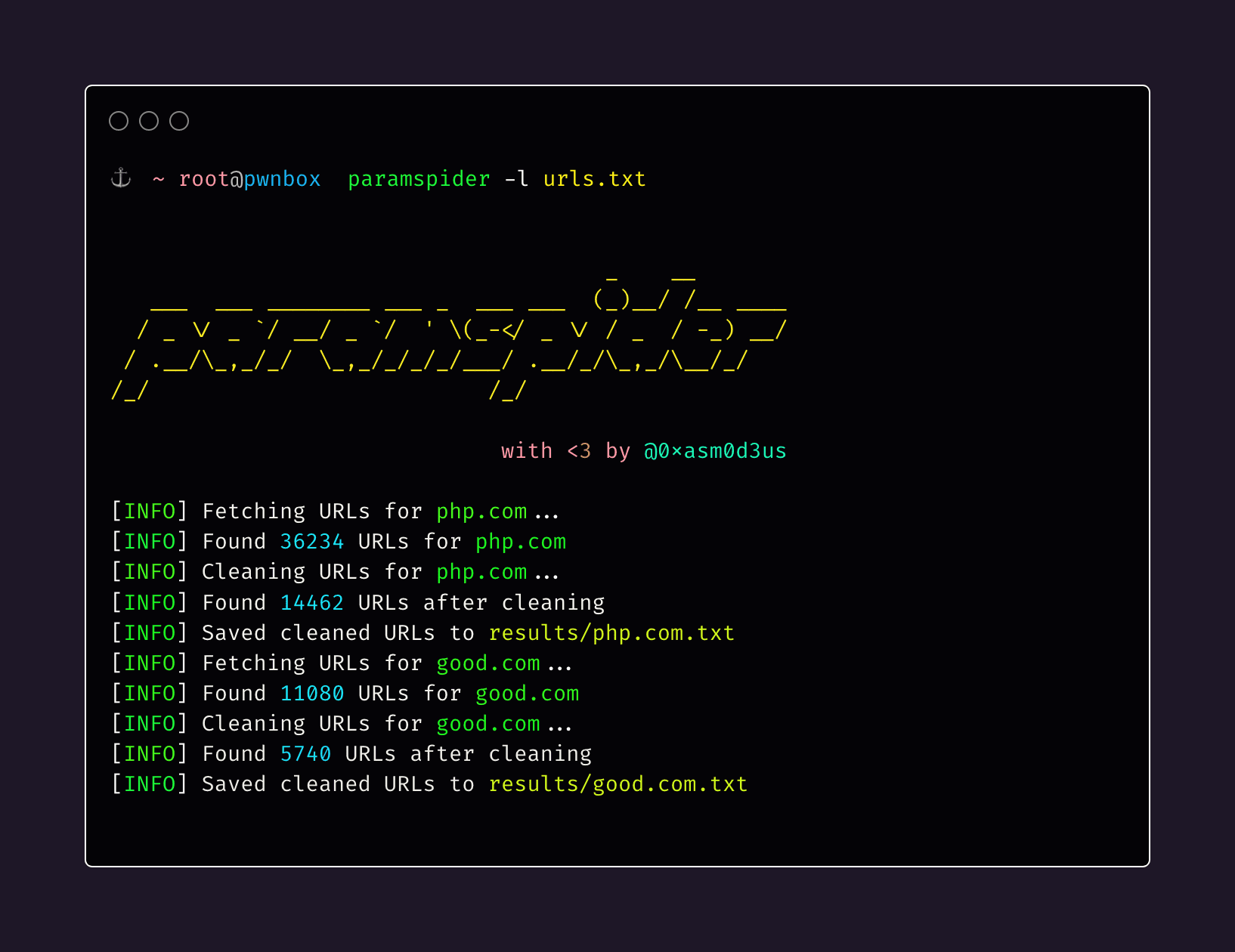

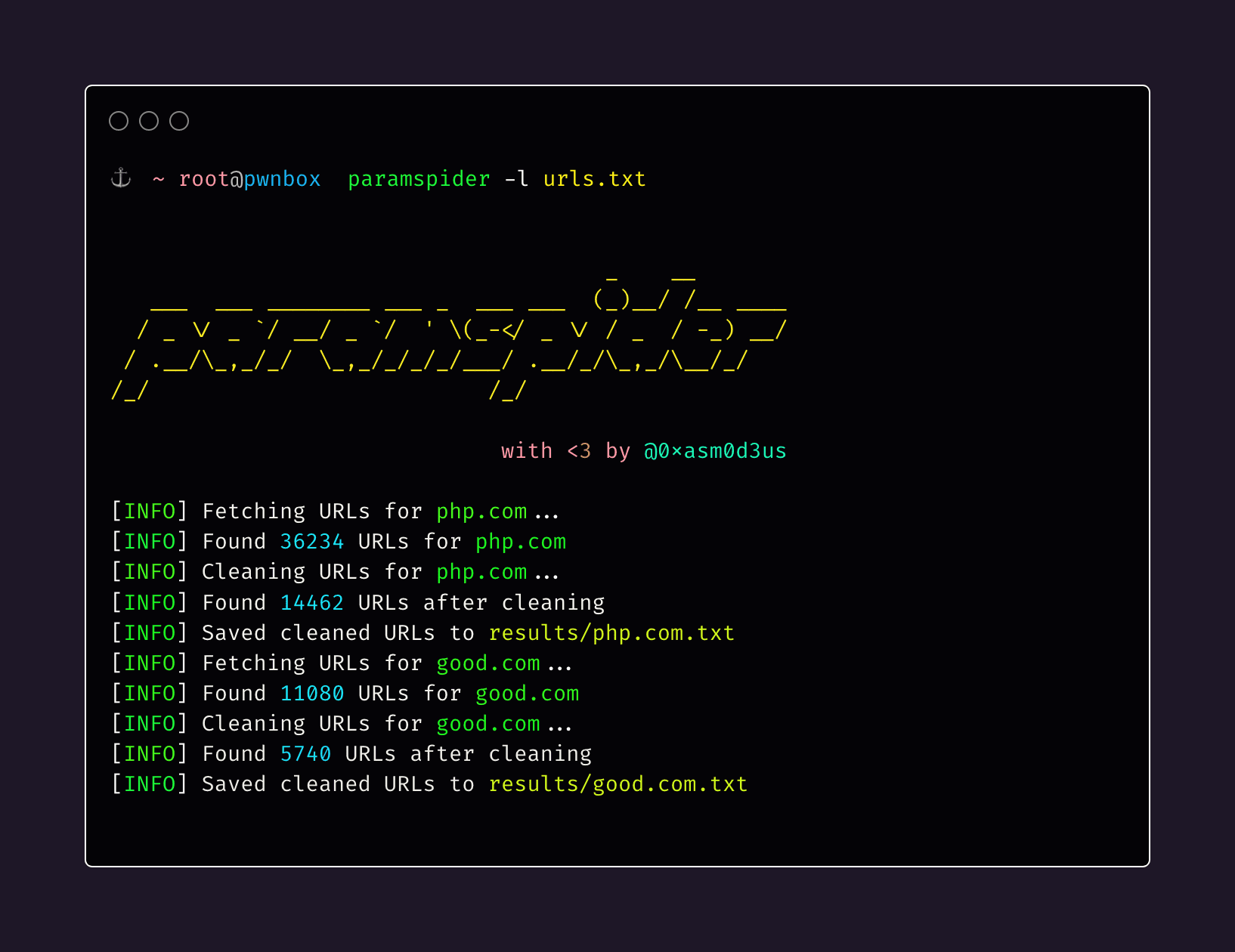

ParamSpider v1.0 releases: Mining URLs from dark corners of Web Archives for bug hunting/fuzzing/further probing

ParamSpider: Parameter miner for humans

ParamSpider allows you to fetch URLs related to any domain or a list of domains from Wayback archives. It filters out “boring” URLs, allowing you to focus on the ones that matter the most.
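The archive lookup can be sketched against the Wayback Machine's public CDX server API. This is a minimal illustration under stated assumptions, not ParamSpider's own code. The endpoint and the `matchType`, `fl` and `collapse` query parameters belong to the CDX API. The helper names (`cdx_query_url`, `fetch_archived_urls`, `has_parameters`) are hypothetical. Treating any URL without a `key=value` query string as "boring" is also an assumption about what the filter keeps.

```python
from urllib.parse import urlencode, urlsplit
from urllib.request import urlopen

# Public Wayback Machine CDX server endpoint.
CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain, include_subdomains=True):
    """Build a CDX query listing archived URLs for a domain (hypothetical helper)."""
    params = {
        "url": domain,
        # "domain" also matches subdomains; "host" matches only the exact host.
        "matchType": "domain" if include_subdomains else "host",
        "fl": "original",      # return only the original URL column
        "collapse": "urlkey",  # one line per unique URL key
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def fetch_archived_urls(domain, timeout=60):
    """Ask archive.org (never the target host) for the archived URLs of a domain."""
    with urlopen(cdx_query_url(domain), timeout=timeout) as response:
        body = response.read().decode("utf-8", errors="replace")
    return [line.strip() for line in body.splitlines() if line.strip()]


def has_parameters(url):
    """Assumed "interesting" test: the query string carries a key=value pair."""
    return "=" in urlsplit(url).query
```

Calling `[u for u in fetch_archived_urls("hackerone.com") if has_parameters(u)]` sends a single request to archive.org, which is why this kind of mining does not touch the target host.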

Key Features:

- Finds parameters in archived URLs of the entered domain.

- Supports excluding URLs with specific extensions.

- Saves the results in a clean, readable format.

- Mines parameters from web archives without interacting with the target host.

Install

$ git clone https://github.com/devanshbatham/ParamSpider

$ cd ParamSpider

$ pip install -r requirements.txt

Use

1 – For a simple scan (without the --exclude parameter)

$ python3 paramspider.py --domain hackerone.com

-> Example output: https://hackerone.com/test.php?q=FUZZ

2 – To exclude URLs with specific extensions

$ python3 paramspider.py --domain hackerone.com --exclude php,jpg,svg
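The extension filter can be sketched as follows. This is a minimal sketch, assuming the comma-separated `--exclude` value is matched against the extension of each URL's path. The helpers `parse_exclusions`, `is_excluded` and `filter_urls` are hypothetical, and ParamSpider's own matching rule may differ.

```python
import posixpath
from urllib.parse import urlsplit


def parse_exclusions(arg):
    """Turn a comma-separated list like "php,jpg,svg" into {"php", "jpg", "svg"}."""
    return {ext.strip().lstrip(".").lower() for ext in arg.split(",") if ext.strip()}


def is_excluded(url, excluded):
    """True if the URL path ends in one of the excluded extensions (case-insensitive)."""
    path = urlsplit(url).path
    ext = posixpath.splitext(path)[1].lstrip(".").lower()
    return bool(ext) and ext in excluded


def filter_urls(urls, exclude_arg):
    """Drop every URL whose path extension appears in the --exclude list."""
    excluded = parse_exclusions(exclude_arg)
    return [url for url in urls if not is_excluded(url, excluded)]
```

Looking only at the path keeps a URL such as `https://hackerone.com/search?q=test.php`, whose `.php` appears in a parameter value rather than in the requested resource.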

3 – To find nested parameters

$ python3 paramspider.py --domain hackerone.com --level high

-> Example output: https://hackerone.com/test.php?p=test&q=FUZZ
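Both example outputs can be reproduced by cutting an archived URL after a parameter name and appending the `FUZZ` placeholder. The sketch below is one assumed way to do it, and `fuzz_urls` is a hypothetical helper. The rule that `--level high` also keeps the first pair and fuzzes the second is inferred from the example output, not taken from ParamSpider's source.

```python
from urllib.parse import urlsplit, urlunsplit

PLACEHOLDER = "FUZZ"


def fuzz_urls(url, level=None, placeholder=PLACEHOLDER):
    """Return placeholder variants of an archived URL.

    Default: replace the first parameter's value with the placeholder.
    level="high": additionally keep the first pair and fuzz the second one.
    """
    parts = urlsplit(url)
    # Split the raw query so percent-encoded values are kept as archived.
    pairs = [pair for pair in parts.query.split("&") if pair]
    depth = 2 if level == "high" else 1
    variants = []
    for i in range(min(depth, len(pairs))):
        name = pairs[i].split("=", 1)[0]
        query = "&".join(pairs[:i] + [f"{name}={placeholder}"])
        # The fragment is dropped; browsers never send it to the server.
        variants.append(urlunsplit((parts.scheme, parts.netloc, parts.path, query, "")))
    return variants
```

For example, `fuzz_urls("https://hackerone.com/test.php?p=test&q=1", level="high")` yields both `https://hackerone.com/test.php?p=FUZZ` and `https://hackerone.com/test.php?p=test&q=FUZZ`.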

4 – To save the results

$ python3 paramspider.py --domain hackerone.com --exclude php,jpg --output hackerone.txt
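A "clean" output file can be as simple as a deduplicated, sorted list with one URL per line. This is a minimal sketch under that assumption. `format_results` and `save_results` are hypothetical helpers, and the exact layout and location of ParamSpider's own output file are not described here.

```python
from pathlib import Path


def format_results(urls):
    """Deduplicate and sort URLs, one per line (the assumed "clean" layout)."""
    unique = sorted(set(urls))
    return "".join(f"{url}\n" for url in unique)


def save_results(urls, output_path):
    """Write the formatted results to output_path, creating parent folders."""
    path = Path(output_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    text = format_results(urls)
    path.write_text(text, encoding="utf-8")
    return text.count("\n")  # number of unique URLs written
```

One URL per line keeps the file easy to pipe into fuzzers or other line-oriented tools.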

Example

$ python3 paramspider.py --domain bugcrowd.com --exclude woff,css,js,png,svg,php,jpg --output bugcrowd.txt
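The command above combines all four documented flags. The following hypothetical `argparse` parser only mirrors their names as shown in this post. The defaults and help texts are assumptions, not ParamSpider's actual option definitions.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags shown in this post."""
    parser = argparse.ArgumentParser(description="Mine parameterized URLs from web archives")
    parser.add_argument("--domain", required=True, help="target domain, e.g. bugcrowd.com")
    parser.add_argument("--exclude", default="", help="comma-separated extensions to skip")
    parser.add_argument("--level", default=None, help='"high" also fuzzes nested parameters')
    parser.add_argument("--output", default=None, help="file to write the results to")
    return parser


def exclusions(args):
    """Split the --exclude value into a list of extensions."""
    return [ext for ext in args.exclude.split(",") if ext]
```

Parsing the example command line gives `domain="bugcrowd.com"`, seven excluded extensions and `output="bugcrowd.txt"`, with `--level` left unset.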

Copyright (C) 2020 0xAsm0d3us