sigurlfind3r: passive reconnaissance tool for known URL discovery

sigurlfind3r

A passive reconnaissance tool for known URL discovery. It gathers a list of URLs passively from various online sources.

Features

- Collect known URLs:

  - Fetches from AlienVault’s OTX, Common Crawl, URLScan, GitHub, and the Wayback Machine.

  - Fetches disallowed paths from robots.txt files found on the target domain and snapshotted by the Wayback Machine.

- Reduce noise:

  - Filters URLs by regex.

  - Removes duplicate pages, that is, URLs whose patterns are probably repetitive and point to the same web template.

- Output to stdout for piping, or save to a file.
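
The robots.txt and duplicate-removal features above can be illustrated with a short Python sketch. This is not the tool's actual implementation: the function names (`disallowed_paths`, `url_pattern`, `dedupe_by_pattern`) and the pattern heuristic (numeric path segments collapsed, query values ignored) are assumptions chosen for illustration.

```python
from urllib.parse import urlsplit


def disallowed_paths(robots_txt: str) -> list:
    """Return the non-empty Disallow paths from a robots.txt body, in order."""
    paths = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


def url_pattern(url: str) -> str:
    """Collapse a URL into a rough template key (hypothetical heuristic)."""
    parts = urlsplit(url)
    # Replace purely numeric path segments with a placeholder.
    segments = ["{n}" if seg.isdigit() else seg for seg in parts.path.split("/")]
    # Keep query parameter names and ignore their values.
    names = sorted(p.split("=", 1)[0] for p in parts.query.split("&") if p)
    return f"{parts.netloc}{'/'.join(segments)}?{'&'.join(names)}"


def dedupe_by_pattern(urls):
    """Keep only the first URL seen for each pattern key."""
    seen = set()
    kept = []
    for url in urls:
        key = url_pattern(url)
        if key not in seen:
            seen.add(key)
            kept.append(url)
    return kept
```

With this heuristic, `https://example.com/item/1?ref=a` and `https://example.com/item/2?ref=b` share one key, so only the first is kept. A real tool would likely need a more careful notion of "same template".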

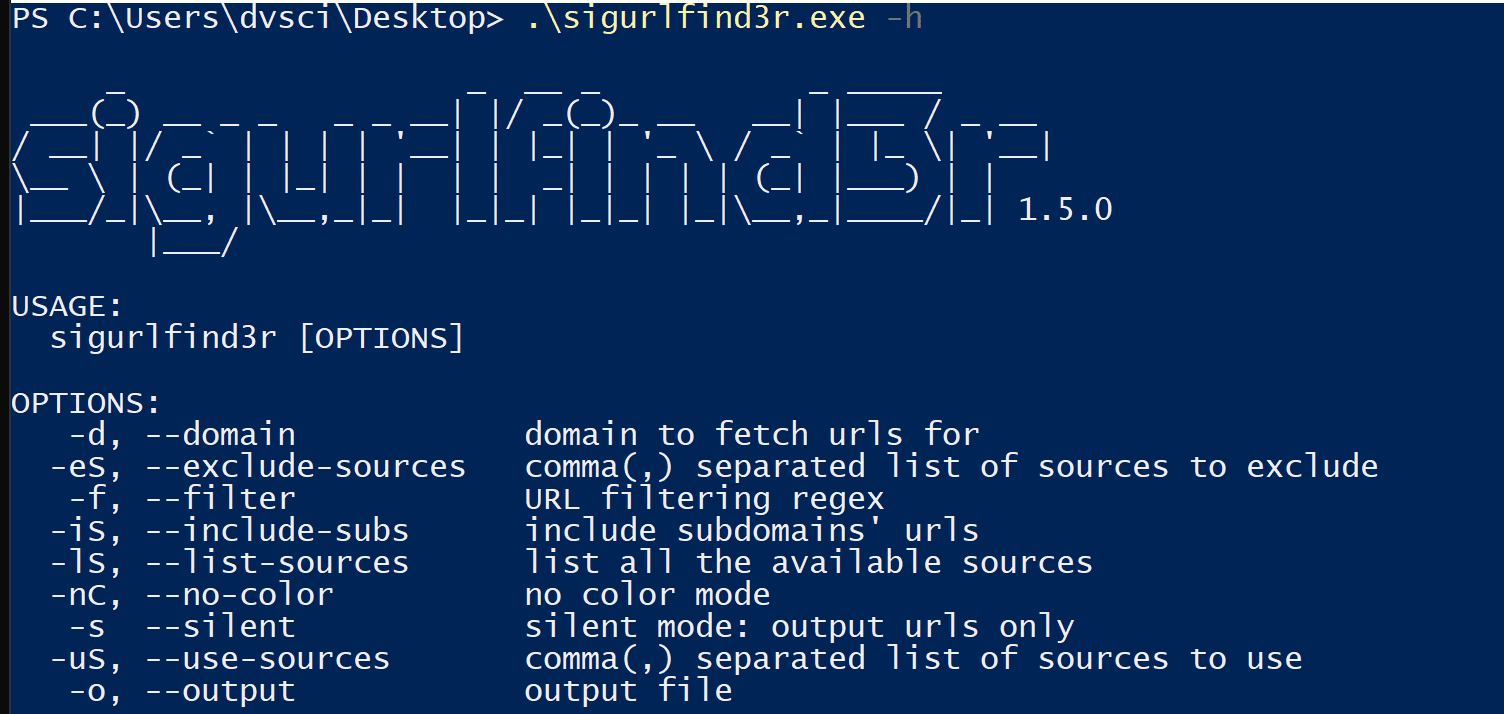

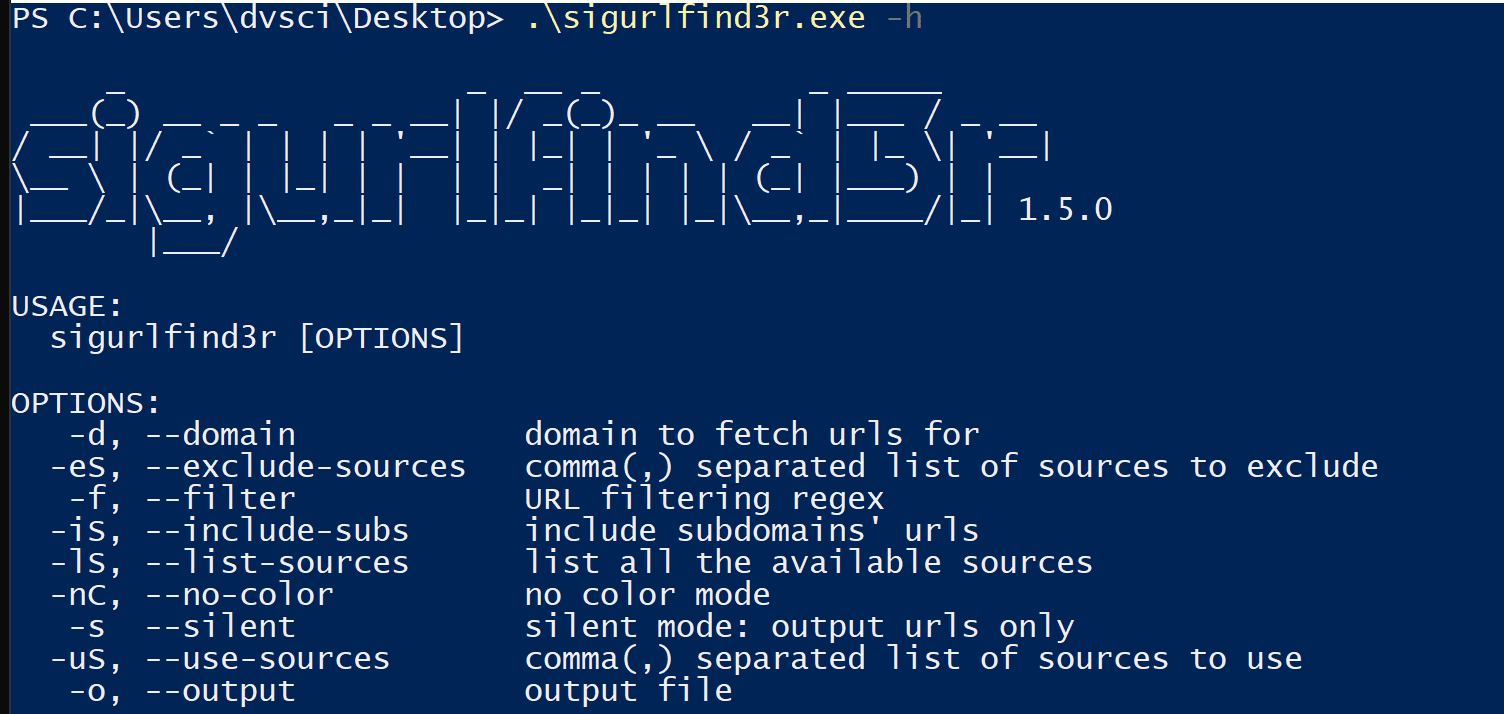

Use

Examples

Basic

sigurlfind3r -d tesla.com

Regex filter URLs

sigurlfind3r -d tesla.com -f ".(jpg|jpeg|gif|png|ico|css|eot|tif|tiff|ttf|woff|woff2)"
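
To show what a filter of this kind does, here is a minimal Python sketch. It assumes that URLs matching the regex are dropped from the output, which should be checked against the tool's help text. `filter_urls` and `STATIC_ASSET` are hypothetical names. The sketch also uses a stricter variant of the pattern, because an unescaped leading `.` matches any character, not only a literal dot.

```python
import re
from urllib.parse import urlsplit

# Stricter variant of the pattern above: a literal dot, anchored to the end of the path.
STATIC_ASSET = re.compile(
    r"\.(jpg|jpeg|gif|png|ico|css|eot|tif|tiff|ttf|woff|woff2)$",
    re.IGNORECASE,
)


def filter_urls(urls, pattern=STATIC_ASSET):
    """Drop URLs whose path matches the pattern (assumed exclude semantics)."""
    return [u for u in urls if not pattern.search(urlsplit(u).path)]
```

Matching against the path alone means a query string such as `?v=2` does not hide a static-asset extension from the filter.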

Include Subdomains’ URLs

sigurlfind3r -d tesla.com -iS
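
The difference between the default scope and the subdomain-inclusive scope can be sketched as a host check. This is an assumption about how such scoping might work, not the tool's code, and `in_scope` is a hypothetical helper.

```python
from urllib.parse import urlsplit


def in_scope(url: str, domain: str, include_subdomains: bool = False) -> bool:
    """Return True if the URL's host is the domain or, optionally, one of its subdomains."""
    # URLs without a scheme have no parsed hostname and are treated as out of scope.
    host = (urlsplit(url).hostname or "").lower()
    domain = domain.lower()
    if host == domain:
        return True
    # Require a dot before the domain so "nottesla.com" does not match "tesla.com".
    return include_subdomains and host.endswith("." + domain)
```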

Installation

Copyright (c) 2021 Signed Security