Afuzz – An automated web path fuzzing tool

Afuzz is an automated web path fuzzing tool for bug bounty projects.

Features

- Automatically detects the development language used by the website and generates extensions for that language

- Uses a blacklist to filter invalid pages

- Uses a whitelist to find content on the page that bug bounty hunters are interested in

- Filters random content on the page

- Judges 404 error pages in multiple ways

- Performs statistical analysis on the results after scanning to obtain the final result

- Supports HTTP/2

Install

git clone https://github.com/rapiddns/Afuzz.git

cd Afuzz

python setup.py install

Wordlists (IMPORTANT)

Summary:

- A wordlist is a text file in which each line is a path.

- Extensions: Afuzz replaces the %EXT% keyword with the extensions passed via the -e flag. If -e is not given, the default extensions are used.

- Domain-based entries: Afuzz can generate dictionary entries from the target's domain name. It replaces %subdomain% with the host, %rootdomain% with the root domain, %sub% with the subdomain, and %domain% with the domain. The %ext% keyword is expanded in these entries as well.

Examples:

- Normal extensions

index.%EXT%

Passing asp and aspx extensions will generate the following dictionary:

index

index.asp

index.aspx

- host

%subdomain%.%ext%

%sub%.bak

%domain%.zip

%rootdomain%.zip

Passing https://test-www.hackerone.com and the php extension will generate the following dictionary:

test-www.hackerone.com.php

test-www.zip

test.zip

www.zip

testwww.zip

hackerone.zip

hackerone.com.zip
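The substitutions in the examples above can be sketched in a few lines of Python. This is only an illustration and not Afuzz's actual code: the function names `expand_extensions` and `fill_host_placeholders` are hypothetical, and the root domain is naively assumed to be the last two labels of the host. The host example also lists several variants for %sub% (such as test.zip and testwww.zip), which this sketch does not attempt to generate.

```python
import re
from urllib.parse import urlparse

# The wordlist examples use both %EXT% and %ext%, so match case-insensitively.
EXT_TOKEN = re.compile(r"%ext%", re.IGNORECASE)


def expand_extensions(template, extensions):
    """Return the bare name plus one entry per extension (hypothetical helper)."""
    if not EXT_TOKEN.search(template):
        return [template]
    # Bare entry: drop the keyword together with the dot in front of it.
    bare = re.sub(r"\.?%ext%", "", template, flags=re.IGNORECASE)
    return [bare] + [EXT_TOKEN.sub(lambda _m: ext, template) for ext in extensions]


def fill_host_placeholders(template, url):
    """Replace the host-related keywords in one wordlist line (hypothetical helper)."""
    host = urlparse(url).hostname or ""
    labels = host.split(".")
    # Assumption: the root domain is the last two labels (wrong for e.g. .co.uk).
    root = ".".join(labels[-2:])
    domain = labels[-2] if len(labels) >= 2 else host
    sub = ".".join(labels[:-2])
    replacements = {
        "%subdomain%": host,
        "%rootdomain%": root,
        "%sub%": sub,
        "%domain%": domain,
    }
    for keyword, value in replacements.items():
        template = template.replace(keyword, value)
    return template


print(expand_extensions("index.%EXT%", ["asp", "aspx"]))
print(fill_host_placeholders("%rootdomain%.zip", "https://test-www.hackerone.com"))
```

A real implementation would need a public-suffix list to find the root domain reliably; the two-label rule is used here only to keep the sketch short.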

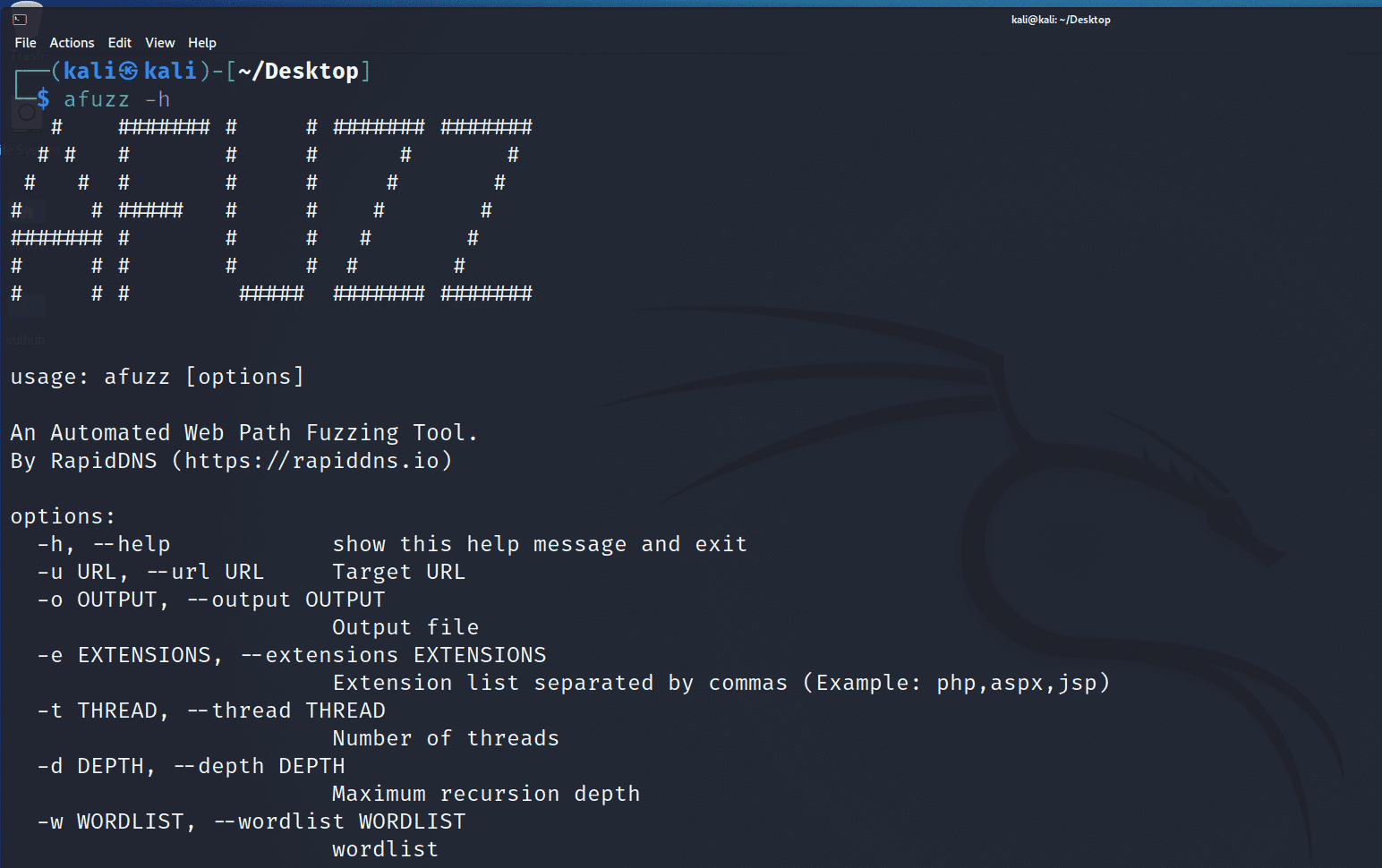

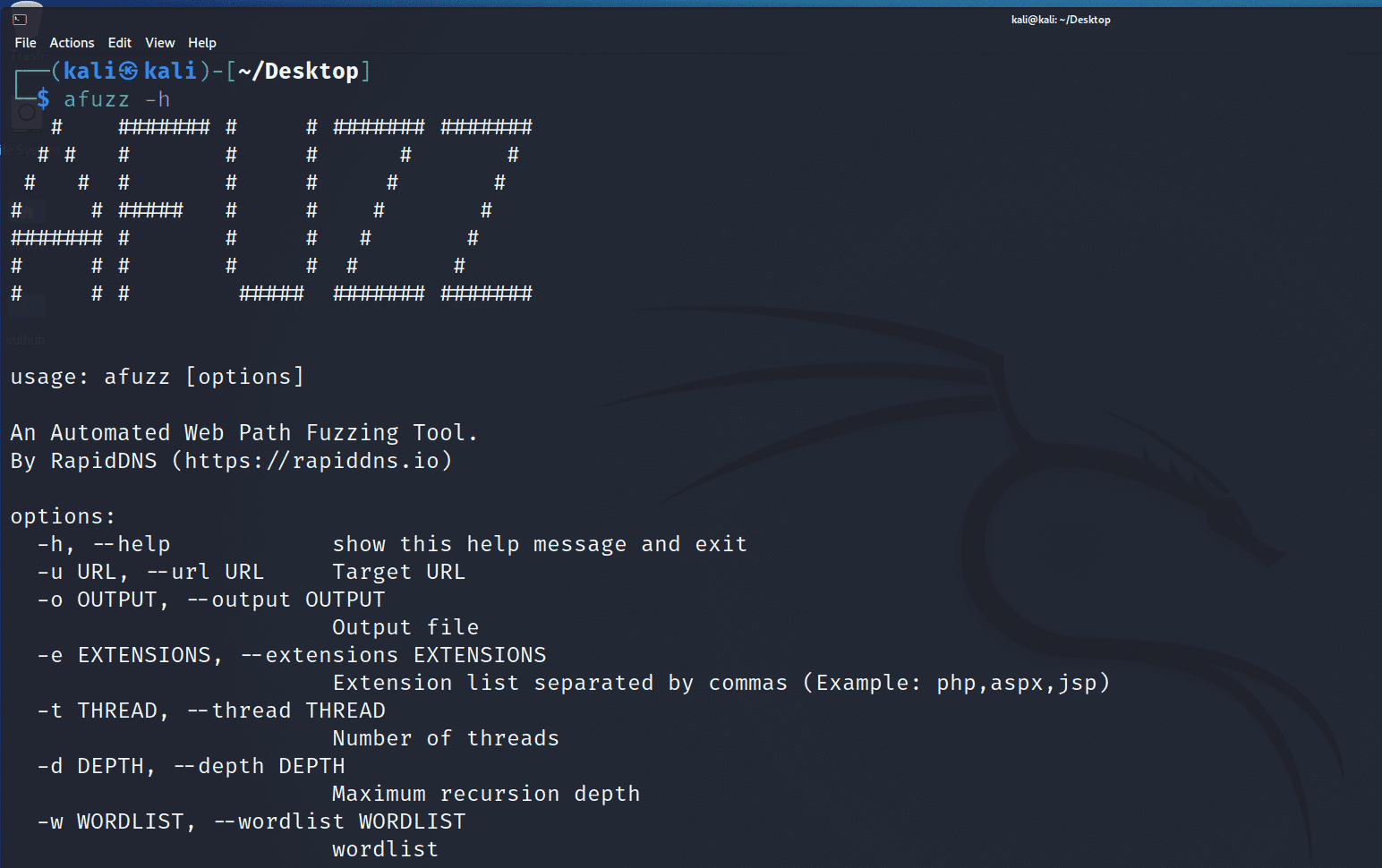

Use

The examples below show how to use Afuzz with its most common arguments. For the full list of options, use the -h argument.

Simple usage

afuzz -u https://target

afuzz -e php,html,js,json -u https://target

afuzz -e php,html,js -u https://target -d 3

Threads

The thread number (-t | --threads) sets the number of separate brute-force processes, so a larger value generally makes Afuzz run faster. The default is 10 threads; you can increase it to speed up a scan.

Even so, the speed still depends heavily on the server's response time. Keep the thread number moderate, because too many concurrent requests can cause a denial of service (DoS) on the target.

afuzz -e aspx,jsp,php,htm,js,bak,zip,txt,xml -u https://target -t 50
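To illustrate why the thread number caps the load on the target, here is a minimal sketch that assumes a thread-pool model; whether Afuzz is implemented this way is not stated here. The names `run_probes` and `make_fake_probe` are hypothetical, and the fake probe only sleeps instead of sending a request, so the example is safe to run anywhere.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_probes(paths, probe, threads=10):
    """Call probe(path) for every path using at most `threads` workers."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # pool.map keeps the results in the same order as the input paths.
        return list(pool.map(probe, paths))


def make_fake_probe(delay=0.01):
    """Build a stand-in probe that records how many calls overlap in time."""
    lock = threading.Lock()
    state = {"active": 0, "peak": 0}

    def probe(path):
        with lock:
            state["active"] += 1
            state["peak"] = max(state["peak"], state["active"])
        time.sleep(delay)  # stands in for the server's response time
        with lock:
            state["active"] -= 1
        return path, 404  # placeholder status code, not a real response

    return probe, state


probe, state = make_fake_probe()
results = run_probes([f"/p{i}" for i in range(40)], probe, threads=5)
print(len(results), "probes finished; peak concurrency:", state["peak"])
```

The peak concurrency never exceeds the worker count, which is why a very large thread number translates directly into more simultaneous requests against the server.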

Blacklist

The blacklist.txt and bad_string.txt files in the /db directory are blacklists used to filter out some pages.

The blacklist.txt file is the same as the one used by dirsearch.

The bad_string.txt file is a text file with one rule per line. Each rule has the format position==content, with == as the separator. The position can be one of: header, body, regex, title.
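As a rough illustration of the position==content format, the sketch below parses rule lines and checks them against a response. It is not Afuzz's implementation: the function names `parse_rule` and `is_blacklisted` are hypothetical, and the matching semantics (plain substring match for header, body and title; a regular expression search over the body for regex) are assumptions.

```python
import re

POSITIONS = {"header", "body", "regex", "title"}


def parse_rule(line):
    """Split one 'position==content' line; return None if it is not a valid rule."""
    line = line.strip()
    if not line or "==" not in line:
        return None
    # Split on the first separator only, so the content may itself contain '=='.
    position, content = line.split("==", 1)
    position = position.strip().lower()
    if position not in POSITIONS:
        return None
    return position, content


def is_blacklisted(rules, headers, body):
    """Return True if any rule matches the response (assumed semantics)."""
    match = re.search(r"<title[^>]*>(.*?)</title>", body, re.IGNORECASE | re.DOTALL)
    title = match.group(1) if match else ""
    header_text = "\n".join(f"{name}: {value}" for name, value in headers.items())
    for position, content in rules:
        if position == "header" and content in header_text:
            return True
        if position == "body" and content in body:
            return True
        if position == "title" and content in title:
            return True
        if position == "regex" and re.search(content, body):
            return True
    return False


# Example rule lines, invented for this sketch.
lines = ["title==404 Not Found", "header==X-Blocked", "regex==error\\s+\\d+"]
rules = [rule for rule in map(parse_rule, lines) if rule is not None]
print(is_blacklisted(rules, {"Server": "nginx"}, "<title>404 Not Found</title>"))
```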

Language detection

The language.txt file contains the rules for detecting the development language used by a website. Its format is consistent with bad_string.txt.

Source: https://github.com/RapidDNS/