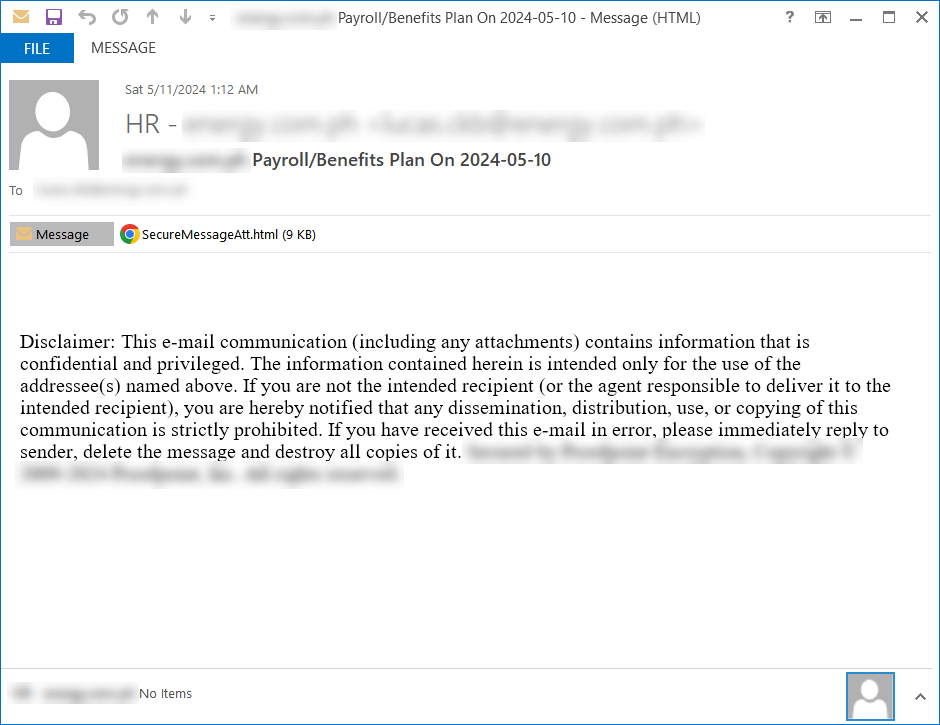

Phishing email mimicking HR notification

In a concerning trend, cybercriminals are increasingly leveraging Large Language Models (LLMs) like ChatGPT to craft sophisticated and deceptive attacks, according to a recent report from Symantec.

While LLMs such as ChatGPT have proven useful for many legitimate applications, malicious actors are also weaponizing them. Symantec's research points to a rise in malware campaigns that use LLM-generated code to deliver harmful payloads and deceive victims.

LLMs are particularly good at generating fluent, human-like text, which makes phishing emails harder to tell apart from legitimate correspondence. Cybercriminals can quickly produce personalized messages designed to trick recipients into clicking malicious links or opening infected attachments.

Symantec's analysis uncovered numerous malware campaigns that use LLM-generated code. These campaigns typically begin with phishing emails containing code that downloads and executes further payloads. The report highlights the deployment of notorious malware strains such as Rhadamanthys, NetSupport, CleanUpLoader (also known as Broomstick or Oyster), ModiLoader (DBatLoader), LokiBot, and Dunihi (H-Worm).

One notable example is a phishing campaign aimed at multiple sectors. The emails, disguised as urgent financial notices, carry password-protected ZIP attachments. Each archive contains a malicious .lnk (Windows shortcut) file that, when opened, runs an LLM-generated PowerShell script. These scripts, neatly formatted and thoroughly commented, then deploy the malware.

Symantec's researchers showed that similar scripts could be generated with LLMs such as ChatGPT with minimal effort. The scripts' clean formatting, thorough comments, and accurate grammar are telltale signs that they were produced by AI rather than written by hand.

The growing misuse of AI in cyberattacks raises both ethical and security concerns. Countering this emerging threat will require researchers, security vendors, and policymakers to work together on strategies that protect users and organizations from malicious uses of AI.

As AI continues to advance, so too will its potential for both good and harm. Cybersecurity professionals must stay ahead of the curve, continuously adapting their strategies to counter AI-powered attacks and ensure a safer digital future.
