HTTPLoot

An automated tool which can simultaneously crawl, fill forms, trigger error/debug pages, and “loot” secrets out of the client-facing code of sites.

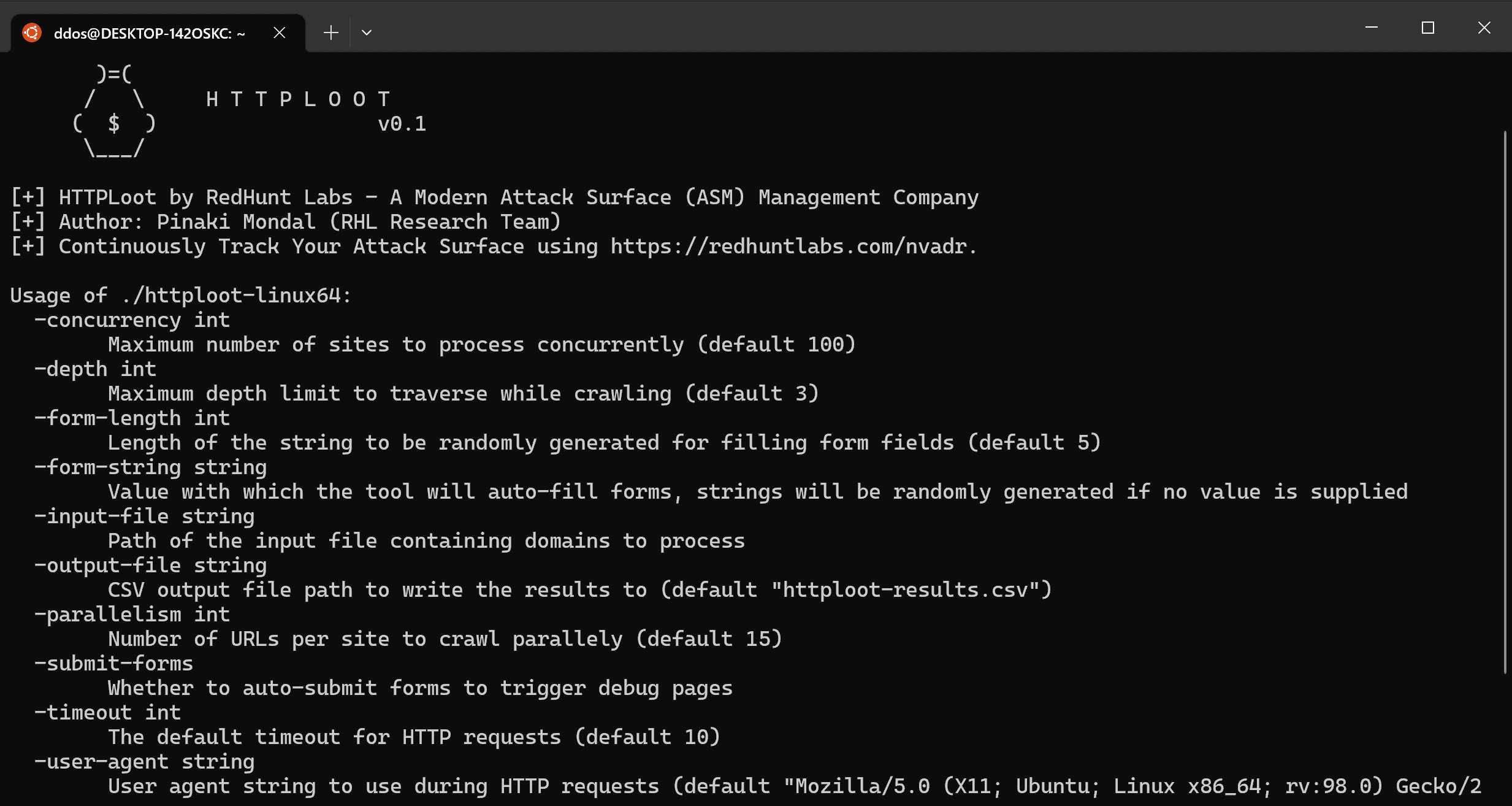

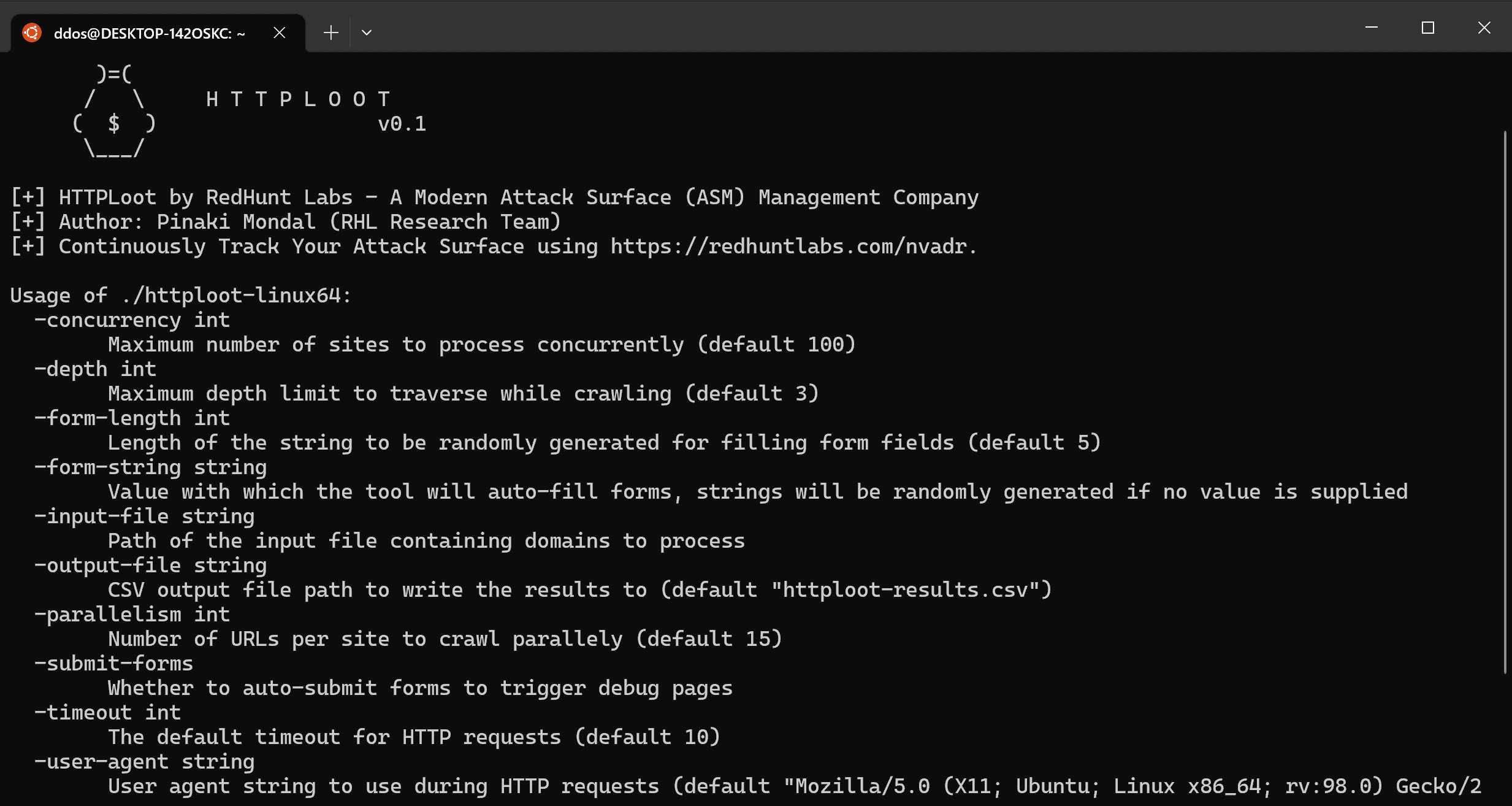

Usage

You will need two JSON files (lootdb.json and regexes.json) along with the binary, all of which you can get from the repo itself. Once all three files are in the same folder, you can run the tool.

Concurrent scanning

There are two flags which help with the concurrent scanning:

- -concurrency: Specifies the maximum number of sites to process concurrently.

- -parallelism: Specifies the number of links per site to crawl in parallel.

Both -concurrency and -parallelism strongly affect the tool's performance and the reliability of its results.
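To illustrate how the two limits relate, here is a minimal sketch of a two-level worker model. It is not HTTPLoot's actual implementation; `scan_all`, `scan_site`, and `fetch_link` are hypothetical names, and `fetch_link` is a placeholder that performs no network request.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_link(link):
    # Placeholder for a real HTTP request (assumption: no network access here).
    return f"fetched {link}"


def scan_site(site, links, parallelism):
    # Inner pool: at most `parallelism` links of one site are handled at once.
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        return site, list(pool.map(fetch_link, links))


def scan_all(sites, concurrency, parallelism):
    # Outer pool: at most `concurrency` sites are processed at once.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [
            pool.submit(scan_site, site, links, parallelism)
            for site, links in sites.items()
        ]
        return dict(future.result() for future in futures)
```

Under this model, the number of requests in flight is bounded by concurrency multiplied by parallelism, which is why raising both values together can overload either the scanner or the targets.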

Crawling

The crawl depth can be specified using the -depth flag. The integer value supplied is the maximum depth of the chain of links to follow on a site.
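A depth limit of this kind can be sketched as a breadth-first crawl over a link graph. This is an illustration only: the `crawl` function and the in-memory `links` mapping are hypothetical, and treating the start page as depth 0 is an assumption rather than documented behavior.

```python
from collections import deque


def crawl(start, links, max_depth):
    # `links` maps a URL to the URLs found on that page (hypothetical stand-in
    # for fetching and parsing real pages).
    seen = {start}
    queue = deque([(start, 0)])
    visited = []
    while queue:
        url, depth = queue.popleft()
        visited.append(url)
        if depth == max_depth:
            # Pages at the maximum depth are visited but not expanded further.
            continue
        for nxt in links.get(url, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return visited
```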

The -wildcard-crawl flag specifies whether to crawl URLs outside the domain in scope.

NOTE: In the worst case, using this flag can lead to infinite crawling if the crawler keeps finding links to other domains.
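The scope decision can be pictured as a small predicate applied to every discovered link. The sketch below is an assumption about how such a check might look, not the tool's own logic; `in_scope` is a hypothetical helper, and counting subdomains as in scope is a choice made for this example.

```python
from urllib.parse import urlsplit


def in_scope(url, scope_domain, wildcard_crawl=False):
    # With wildcard crawling enabled, every URL is followed.
    if wildcard_crawl:
        return True
    host = (urlsplit(url).hostname or "").lower()
    scope = scope_domain.lower()
    # Assumption: the scope domain and its subdomains are in scope.
    return host == scope or host.endswith("." + scope)
```

Pairing wildcard crawling with a small crawl depth is one way to keep the crawl bounded.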

Filling forms

If you want the tool to scan for debug pages, specify the -submit-forms flag. This directs the tool to submit forms automatically and try to trigger error/debug pages once a tech stack has been identified.

If the -submit-forms flag is enabled, you can control the string submitted in the form fields. The -form-string flag specifies the string to submit, while the -form-length flag sets the length of the randomly generated string that is filled into the forms.
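As a rough illustration of how the two options could interact, the sketch below returns a fixed string when one is given and otherwise generates a random one of the requested length. The `form_value` helper, its default length, the alphanumeric alphabet, and the precedence of the fixed string are all assumptions, not documented behavior.

```python
import random
import string


def form_value(form_string=None, form_length=8):
    # Assumption: an explicit string takes precedence over random generation.
    if form_string:
        return form_string
    alphabet = string.ascii_letters + string.digits
    return "".join(random.choice(alphabet) for _ in range(form_length))
```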

Network tuning

The following flags tune network behavior:

- -timeout: Specifies the HTTP timeout of requests.

- -user-agent: Specifies the user-agent to use in HTTP requests.

- -verify-ssl: Specifies whether or not to verify SSL certificates.
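To show what these three settings typically control in an HTTP client, here is a minimal sketch using Python's standard library. It does not reflect how HTTPLoot itself is implemented; `build_request` and `fetch` are hypothetical helpers, and the default values are placeholders.

```python
import ssl
import urllib.request


def build_request(url, user_agent, verify_ssl):
    # The user-agent is sent as a request header.
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    ctx = ssl.create_default_context()
    if not verify_ssl:
        # Disable hostname checking first, then certificate verification.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return req, ctx


def fetch(url, timeout=10.0, user_agent="example-agent/1.0", verify_ssl=True):
    req, ctx = build_request(url, user_agent, verify_ssl)
    # The timeout (in seconds) bounds how long the request may block.
    with urllib.request.urlopen(req, timeout=timeout, context=ctx) as resp:
        return resp.status, resp.read()
```

Disabling certificate verification is useful for hosts with self-signed or expired certificates, at the cost of no longer authenticating the server.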

Input/Output

The input file can be specified using the -input-file flag, which takes the path of a file containing a list of URLs to scan. The -output-file flag specifies the path of the results file, which defaults to httploot-results.csv.
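The input and output handling can be sketched as follows. The one-URL-per-line layout and the `url` and `finding` columns are assumptions made for illustration; the actual columns of httploot-results.csv are not described here. `read_urls` and `write_results` are hypothetical helpers.

```python
import csv


def read_urls(lines):
    # Assumption: one URL per line; blank lines are ignored.
    return [line.strip() for line in lines if line.strip()]


def write_results(rows, out):
    writer = csv.writer(out)
    writer.writerow(["url", "finding"])  # hypothetical column names
    writer.writerows(rows)
```

For a real file, open the output with `open(path, "w", newline="")` so that the `csv` module controls line endings.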


Copyright (C) 2022 redhuntlabs