LinkedIn has been thrust into the spotlight for quietly using user-generated content to train its artificial intelligence (AI) models, a move that has sparked significant backlash among its users. The revelation came after the platform updated its privacy policy to include a section detailing the collection of user posts for generative AI training.

Policy Change Highlights Pre-Existing Data Collection

The updated policy states that LinkedIn may use personal information to power AI features such as writing suggestions for texts and posts. Its wording strongly suggests that LinkedIn was already using user data for AI training before the official announcement. This discovery has led to accusations of a breach of trust and fueled user concerns about the platform's data practices.

Users in the EU, Iceland, Norway, Liechtenstein, and Switzerland can breathe a sigh of relief: their data is not currently being used for AI training and will not be for the time being.

Users Express Outrage, Opt-Out Mechanism Offered

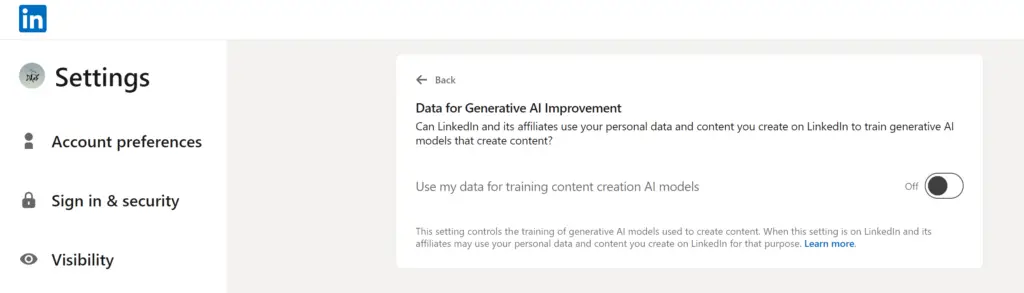

LinkedIn quickly faced a wave of user backlash, with numerous posts condemning its actions and sharing instructions on how to disable the data collection. Although LinkedIn lets users opt out of AI training through their account settings, the fact that data collection was switched on by default has left many users feeling betrayed.

Privacy Concerns and Calls for Investigation

The digital rights organization Open Rights Group (ORG) has called on the UK's data protection regulator, the Information Commissioner's Office (ICO), to investigate LinkedIn's practices, as well as those of other social networks that use user data for AI training by default. ORG argues that the current opt-out model is insufficient and advocates a system based on explicit, opt-in user consent.

LinkedIn’s Response and the Broader Industry Landscape

In response to the criticism, LinkedIn says it minimizes the personal data included in its training sets and uses privacy-enhancing technologies. However, LinkedIn also acknowledges that personal data could inadvertently appear in some AI-generated responses. The Irish Data Protection Commission has confirmed that LinkedIn notified the regulator of the policy changes and of the addition of an opt-out setting.

LinkedIn’s actions reflect a broader trend within the tech industry, where the increasing demand for data to train generative AI models has led many platforms to monetize or repurpose their user-generated content. This has prompted concerns about data privacy and user consent, particularly in the absence of clear and transparent communication.

The Future of Data Privacy in the Age of AI

As AI continues to advance and its reliance on large datasets grows, the debate surrounding data privacy and user consent is likely to intensify. The LinkedIn incident serves as a stark reminder of the importance of transparent data practices and the need for explicit user consent in an increasingly data-driven world.

Via: TechCrunch