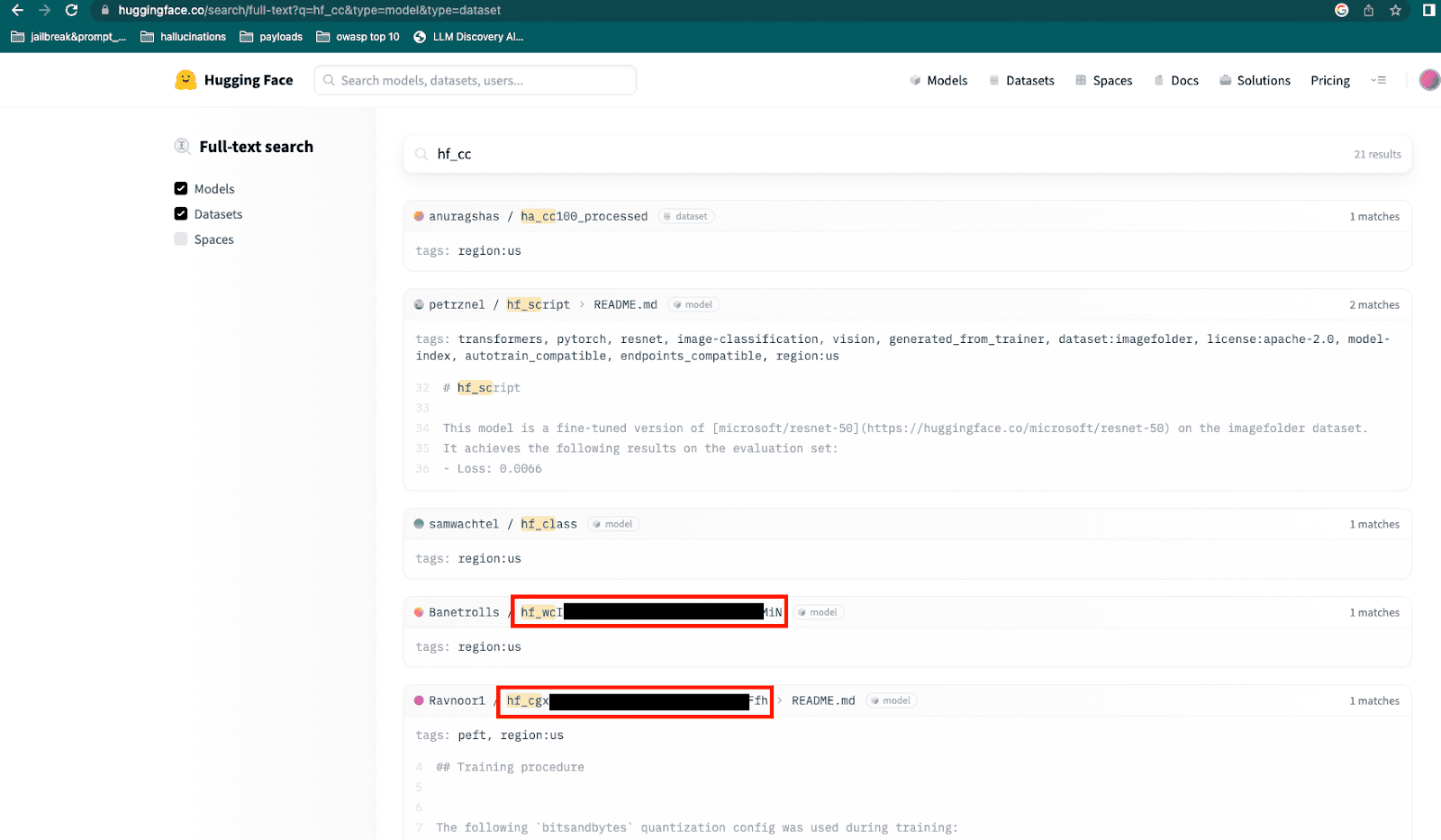

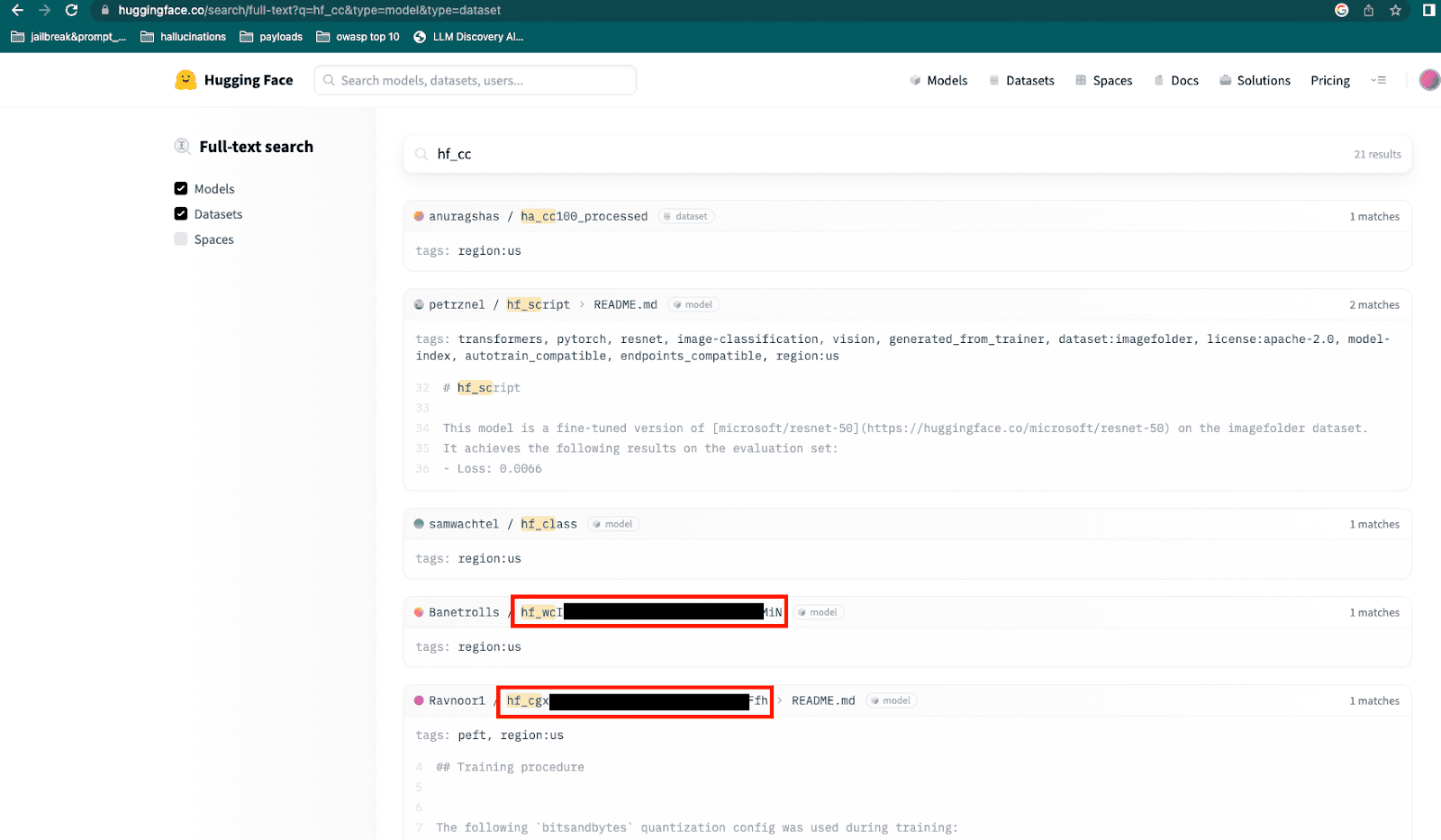

Search in the HuggingFace portal

Lasso Security recently disclosed a critical security lapse: over 1,500 HuggingFace API tokens were left exposed, putting millions of users of Meta-Llama, Bloom, and Pythia at risk. The discovery has rippled through the developer community, underlining the latent dangers in the rapidly evolving landscape of AI and machine learning.

HuggingFace, a pivotal platform for developers working on large language models (LLMs), has become synonymous with the latest advancements in AI. Its Hub, which hosts over half a million AI models and 250,000 datasets, sits alongside the open-source Transformers library as a treasure trove for developers. However, this invaluable resource has also become a potential gateway for cyber threats.

The research, conducted by Lasso Security in November 2023, revealed more than 1,500 exposed API tokens on HuggingFace and GitHub. These tokens are crucial for integrating models and managing repositories. If exploited, they could lead to catastrophic outcomes such as data breaches and the spread of malicious models.

Lasso Security’s investigation took a meticulous approach, scanning GitHub and HuggingFace repositories and using various techniques to work around search limitations. This thorough exploration identified 1,681 valid tokens, which gave access to the accounts of high-value organizations such as Meta, Microsoft, and Google.

The findings go beyond mere access to these accounts. The study showed that the exposed tokens could be used to manipulate training data, posing a dire threat of training data poisoning. By tampering with datasets, attackers could compromise the integrity of machine learning models on a massive scale.

An alarming aspect of the research was the discovery of over ten thousand private models associated with more than 2,500 datasets, highlighting a significant risk of model and dataset theft. The community urgently needs to rethink its security measures and expand the definition of AI resource theft to include both models and datasets.

Following these findings, Lasso Security promptly informed affected users and organizations, urging them to revoke exposed tokens and update their security practices. The team also recommended that HuggingFace implement continuous scanning for exposed tokens and notify users of potential breaches.
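
To make the idea of scanning for exposed tokens concrete, here is a minimal Python sketch of how a team might check its own files before publishing them. It is not Lasso Security's tooling or HuggingFace's scanner. The token pattern (`hf_` followed by 30 or more letters and digits) and the function name `find_candidate_tokens` are assumptions made for illustration, and a match only marks a string worth reviewing, not a confirmed valid token.

```python
import re

# Assumption: user access tokens look like "hf_" followed by a long run of
# letters and digits. The article does not state the exact format, so this
# pattern is deliberately loose (30 or more characters after the prefix).
TOKEN_PATTERN = re.compile(r"\bhf_[A-Za-z0-9]{30,}\b")


def find_candidate_tokens(text):
    """Return (line_number, masked_token) pairs for token-like strings."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in TOKEN_PATTERN.finditer(line):
            token = match.group(0)
            # Mask the middle so the report itself does not leak the secret.
            masked = token[:6] + "..." + token[-4:]
            findings.append((line_number, masked))
    return findings


if __name__ == "__main__":
    # A fabricated placeholder value, not a real token.
    sample = 'model = "bert-base"\ntoken = "hf_' + "A" * 34 + '"\n'
    for line_number, masked in find_candidate_tokens(sample):
        print(f"line {line_number}: possible token {masked}")
```

A check like this could run before each commit or in a CI job. Any hit should be treated as a reason to revoke the token, since a secret that has already been pushed may have been copied.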

This groundbreaking research by Lasso Security serves as a stark reminder of the importance of cybersecurity in the realm of AI and machine learning. As technology continues to evolve, so do the challenges and threats posed by cyber vulnerabilities. This study not only exposes the immediate risks but also calls for a concerted effort to fortify defenses and safeguard the digital landscape.