OpenAttack

OpenAttack is an open-source, Python-based toolkit for textual adversarial attacks. It handles the whole attack process: preprocessing text, accessing the victim model, generating adversarial examples, and evaluating the results.

Features & Uses

OpenAttack has the following features:

- High usability. OpenAttack provides easy-to-use APIs that can support the whole process of textual adversarial attacks;

- Full coverage of attack model types. OpenAttack supports sentence-, word-, and character-level perturbations, as well as gradient-based, score-based, decision-based, and blind attack models;

- Great flexibility and extensibility. You can easily attack a customized victim model or develop and evaluate a customized attack model;

- Comprehensive evaluation. OpenAttack can thoroughly evaluate an attack model in terms of attack effectiveness, adversarial example quality, and attack efficiency.

OpenAttack has a wide range of uses, including:

- Providing various handy baselines for attack models;

- Comprehensively evaluating attack models using its thorough evaluation metrics;

- Assisting in the quick development of new attack models with the help of its common attack components;

- Evaluating the robustness of a machine learning model against various adversarial attacks;

- Conducting adversarial training to improve the robustness of a machine learning model by enriching the training data with generated adversarial examples.
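As an illustration of the last use, adversarial training data can be built by appending each successfully generated adversarial example to the training set under its original label. The sketch below is a minimal, self-contained example of that idea. The names `augment_with_adversarial` and `toy_attack` are hypothetical and are not part of OpenAttack's API; `toy_attack` is a trivial stand-in for a real attack model.

```python
from typing import Callable, List, Optional, Tuple

Example = Tuple[str, int]  # (text, label)


def augment_with_adversarial(
    train_set: List[Example],
    attack: Callable[[str, int], Optional[str]],
) -> List[Example]:
    """Return the training set plus one adversarial copy per attackable example.

    `attack` is assumed to return an adversarial text, or None on failure.
    The adversarial text keeps the ORIGINAL label, since a valid adversarial
    example should not change the true meaning of the input.
    """
    augmented = list(train_set)
    for text, label in train_set:
        adv = attack(text, label)
        if adv is not None and adv != text:
            augmented.append((adv, label))
    return augmented


def toy_attack(text: str, label: int) -> Optional[str]:
    """Hypothetical stand-in attack: a character-level swap on the word 'good'."""
    adv = text.replace("good", "g00d")
    return adv if adv != text else None


train = [("good film", 1), ("dull film", 0)]
augmented_train = augment_with_adversarial(train, toy_attack)
```

In practice the `attack` callable would wrap a real attack model run against the victim model being hardened, and the model would then be retrained on the augmented set.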

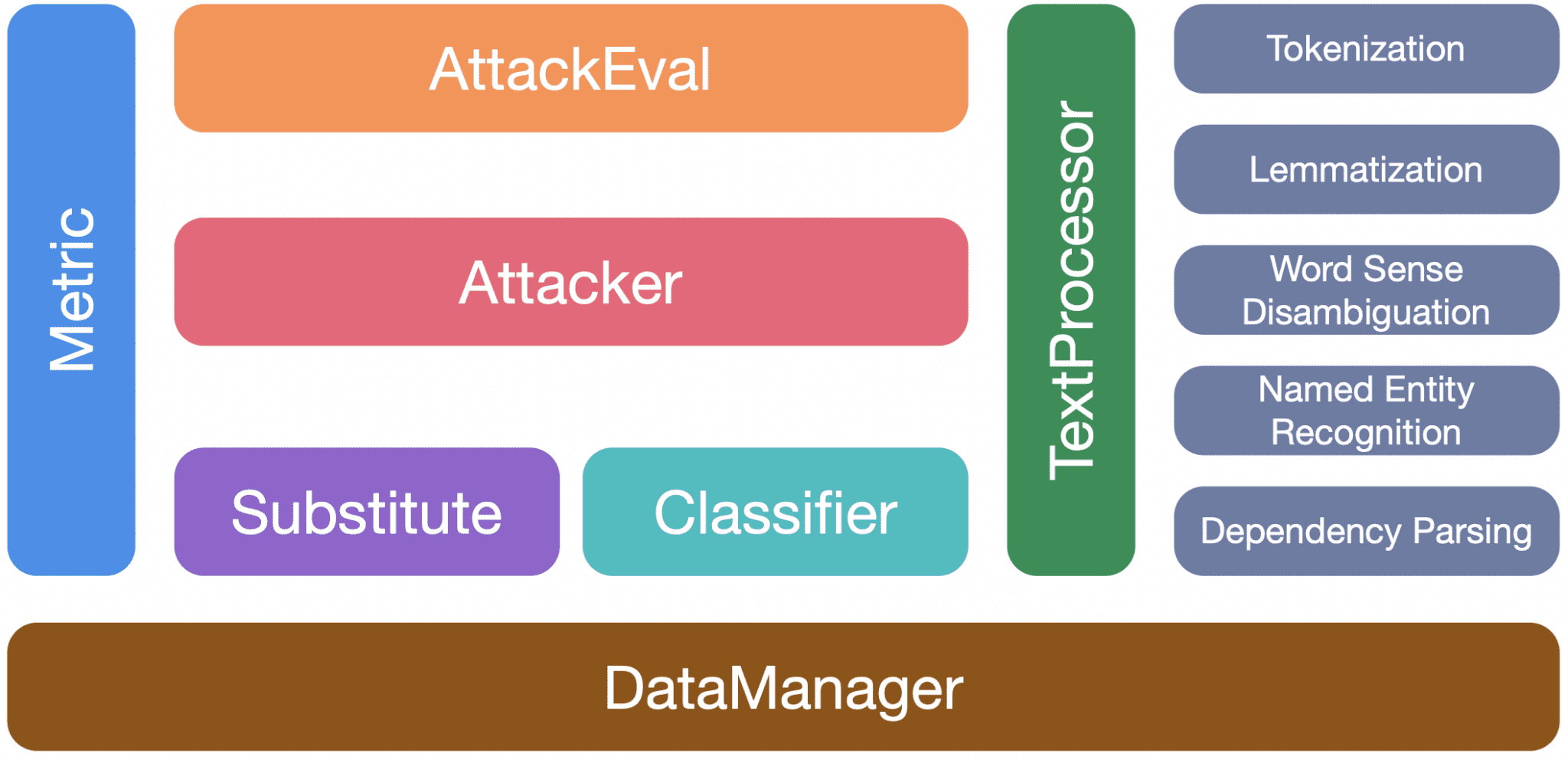

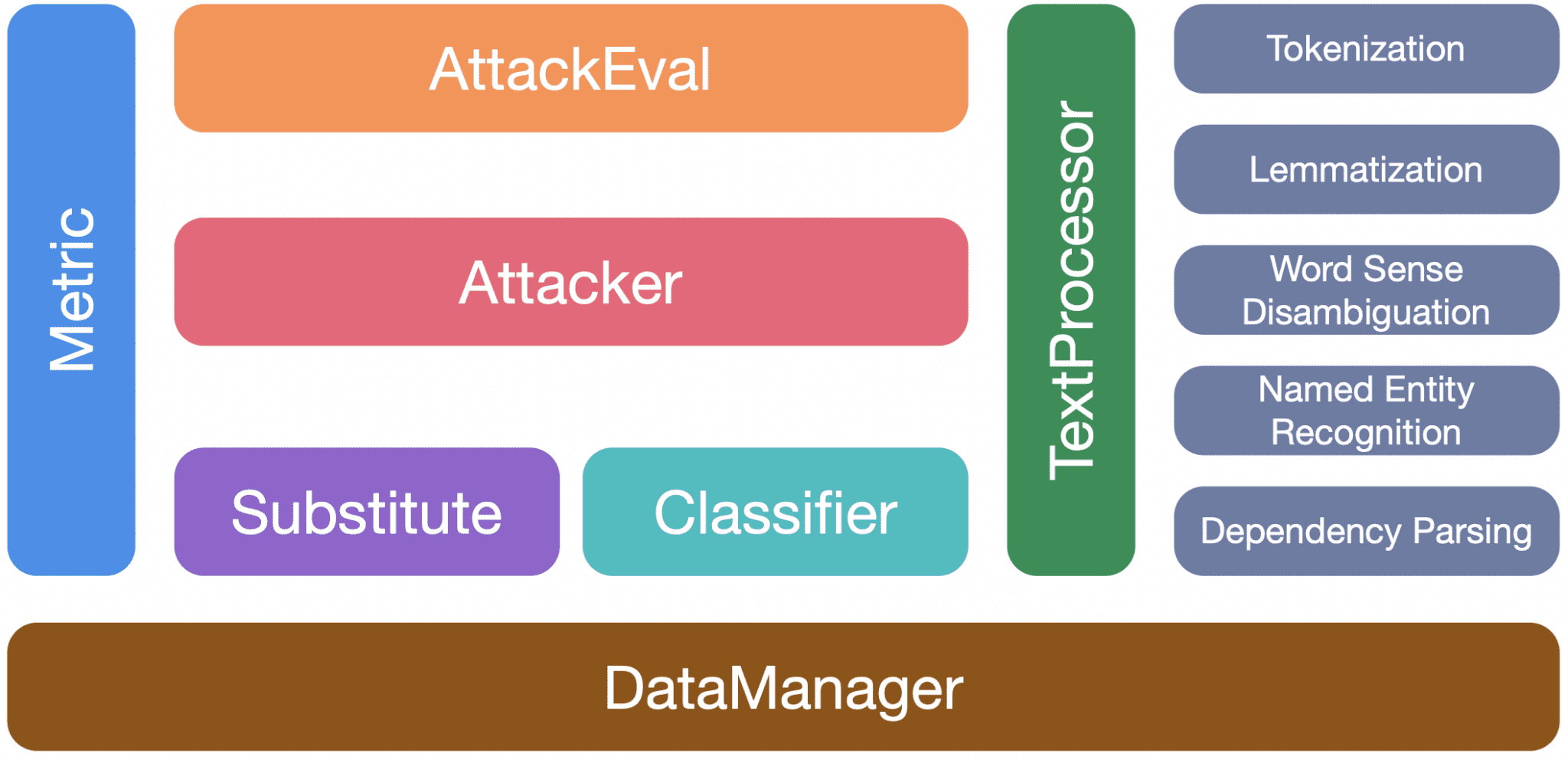

Toolkit Design

Because attack models differ significantly from one another, we leave considerable freedom in the skeleton design of attack models and focus instead on streamlining the general workflow of adversarial attacks and the common components that attack models share.

OpenAttack has 7 main modules:

- TextProcessor: processing the original text sequence to help attack models generate adversarial examples.

- Classifier: wrapping victim classification models.

- Attacker: implementing various attack models.

- Substitute: packaging the word and character substitution methods that are widely used in word- and character-level attack models.

- Metric: providing several adversarial example quality metrics, which can serve either as constraints on adversarial examples during an attack or as evaluation metrics for adversarial attacks.

- AttackEval: evaluating textual adversarial attacks in terms of attack effectiveness, adversarial example quality, and attack efficiency.

- DataManager: managing all the data and saved models that are used in the other modules.
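To make the division of labor among these modules concrete, here is a minimal, self-contained sketch of how such roles could fit together in a score-based word-substitution attack. It does not use OpenAttack itself: every name (`tokenize`, `ToyVictim`, `SYNONYMS`, `modification_rate`, `greedy_attack`, `evaluate`) is a hypothetical stand-in for the corresponding role, and the "victim" is a keyword counter rather than a real model.

```python
from typing import Dict, List, Optional


def tokenize(text: str) -> List[str]:
    """TextProcessor role: lowercase whitespace tokenization."""
    return text.lower().split()


class ToyVictim:
    """Classifier role: wraps a keyword-count 'model' and counts queries."""

    POSITIVE = {"good", "great"}

    def __init__(self) -> None:
        self.queries = 0

    def _p_positive(self, tokens: List[str]) -> float:
        self.queries += 1  # every model call counts toward attack cost
        pos = sum(t in self.POSITIVE for t in tokens)
        return pos / (pos + 1)

    def score(self, tokens: List[str], label: int) -> float:
        """Confidence assigned to `label` (1 = positive, 0 = negative)."""
        p = self._p_positive(tokens)
        return p if label == 1 else 1.0 - p

    def predict(self, tokens: List[str]) -> int:
        return 1 if self._p_positive(tokens) >= 0.5 else 0


# Substitute role: a hand-written synonym table (assumed for illustration).
SYNONYMS: Dict[str, List[str]] = {
    "good": ["decent"], "great": ["solid"], "movie": ["film"],
}


def modification_rate(orig: List[str], adv: List[str]) -> float:
    """Metric role: fraction of token positions that were changed."""
    if not orig:
        return 0.0
    return sum(a != b for a, b in zip(orig, adv)) / len(orig)


def greedy_attack(victim: ToyVictim, tokens: List[str],
                  max_rate: float = 0.5) -> Optional[List[str]]:
    """Attacker role: keep a substitution only if it lowers the victim's
    confidence in the original label; stop once the prediction flips."""
    label = victim.predict(tokens)
    adv = list(tokens)
    best = victim.score(adv, label)
    for i, tok in enumerate(tokens):
        for sub in SYNONYMS.get(tok, []):
            candidate = adv[:i] + [sub] + adv[i + 1:]
            # The metric doubles as a constraint during the attack.
            if modification_rate(tokens, candidate) > max_rate:
                continue
            s = victim.score(candidate, label)
            if s < best:
                adv, best = candidate, s
                if victim.predict(adv) != label:
                    return adv
    return None  # attack failed within the constraint


def evaluate(texts: List[str]) -> Dict[str, float]:
    """AttackEval role: effectiveness, example quality, and efficiency."""
    victim = ToyVictim()
    successes, rates = 0, []
    for text in texts:
        tokens = tokenize(text)
        adv = greedy_attack(victim, tokens)
        if adv is not None:
            successes += 1
            rates.append(modification_rate(tokens, adv))
    return {
        "success_rate": successes / len(texts),
        "avg_modification_rate": sum(rates) / len(rates) if rates else 0.0,
        "avg_queries": victim.queries / len(texts),
    }


report = evaluate(["a good movie", "great and good movie overall", "boring"])
```

The point of the sketch is the separation of concerns: the attacker only sees the victim through `predict` and `score`, draws candidates from the substitution table, and reuses the same metric both as a constraint and as an evaluation measure.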

Changelog v2.1.1

- HOTFIX: tqdm version for datasets

Install & Use

Copyright (c) 2020 THUNLP