HiddenLayer's Synaptic Adversarial Intelligence (SAI) team has disclosed a new method for embedding backdoors in machine learning models, dubbed ShadowLogic. The technique lets attackers implant codeless, surreptitious backdoors into models, posing a serious risk to AI supply chains across many domains. According to the researchers, ShadowLogic backdoors can persist through fine-tuning, which would let an attacker manipulate downstream applications built on a compromised model.

Backdoors have long been a staple of cyberattacks, traditionally used to bypass security measures in software, hardware, and firmware. ShadowLogic extends the idea to a new target: backdoors that subvert the logic of neural networks themselves, without relying on the execution of malicious code. According to the HiddenLayer SAI team, “The emergence of backdoors like ShadowLogic in computational graphs introduces a whole new class of model vulnerabilities that do not require traditional code execution exploits.” This marks a significant shift in how attackers can compromise AI systems.

Unlike traditional methods that target a model’s weights and biases, ShadowLogic works by manipulating the computational graph of a neural network. This graph, which defines how data flows through the model and which operations are applied to that data, can be subtly altered to produce attacker-chosen outputs. The key to ShadowLogic is that it allows attackers to implant “no-code logic backdoors in machine learning models,” meaning the backdoor is embedded in the structure of the model itself rather than in executable code, which makes it difficult to detect.

The report explains, “A computational graph is a mathematical representation of the various computational operations in a neural network… These graphs describe how data flows through the neural network and the operations applied to the data.” By hijacking the graph, attackers can define a “shadow logic” that only triggers when a specific input, known as a trigger, is received.
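To make the idea of graph-level "shadow logic" concrete, here is a minimal sketch in plain Python. It is not HiddenLayer's implementation and does not use any real model format: the graph layout, the operator names (`mul`, `add`, `equal`, `where`), the trigger value `1337.0`, and the forced output `-1.0` are all assumptions chosen for illustration. The point it tries to show is that the backdoor consists only of extra graph nodes built from ordinary operators, with the weights left untouched.

```python
from typing import Callable, Dict, List, Tuple

# Generic operators that a model runtime would already provide.
# None of them is malicious on its own.
OPS: Dict[str, Callable[..., float]] = {
    "mul": lambda a, b: a * b,
    "add": lambda a, b: a + b,
    "equal": lambda a, b: 1.0 if a == b else 0.0,
    "where": lambda cond, x, y: x if cond else y,
}

# A graph is pure data: node name -> (operator name, input names).
Graph = Dict[str, Tuple[str, List[str]]]


def run(graph: Graph, output: str, values: Dict[str, float]) -> float:
    """Evaluate `output` by recursively evaluating the nodes it depends on."""
    if output in values:
        return values[output]
    op, inputs = graph[output]
    args = [run(graph, name, values) for name in inputs]
    values[output] = OPS[op](*args)
    return values[output]


# Benign toy model: y = x * w + b
clean: Graph = {
    "scaled": ("mul", ["x", "w"]),
    "y": ("add", ["scaled", "b"]),
}

# Tampered copy: identical weights, plus two extra nodes that compare the
# input against a trigger and, on a match, replace the real output.
backdoored: Graph = dict(clean)
backdoored["is_trigger"] = ("equal", ["x", "trigger"])
backdoored["y_final"] = ("where", ["is_trigger", "forced", "y"])


def predict(graph: Graph, output: str, x: float) -> float:
    # w and b stand in for learned weights; trigger and forced are constants
    # the attacker would store in the graph (hypothetical values).
    values = {"x": x, "w": 2.0, "b": 1.0, "trigger": 1337.0, "forced": -1.0}
    return run(graph, output, values)


print(predict(clean, "y", 3.0))               # 7.0
print(predict(backdoored, "y_final", 3.0))    # 7.0 (matches the clean model)
print(predict(backdoored, "y_final", 1337.0)) # -1.0 (trigger fired)
```

On every input except the trigger, the tampered graph returns exactly what the clean graph returns, which is why behavioral testing on ordinary data would be unlikely to expose it.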

One of the defining features of ShadowLogic is its use of triggers, which activate the malicious behavior. These triggers can take many forms, depending on the model’s modality. In an image classification model, for example, the trigger could be a subtle subset of pixels. In language models, it could be a specific keyword or phrase.

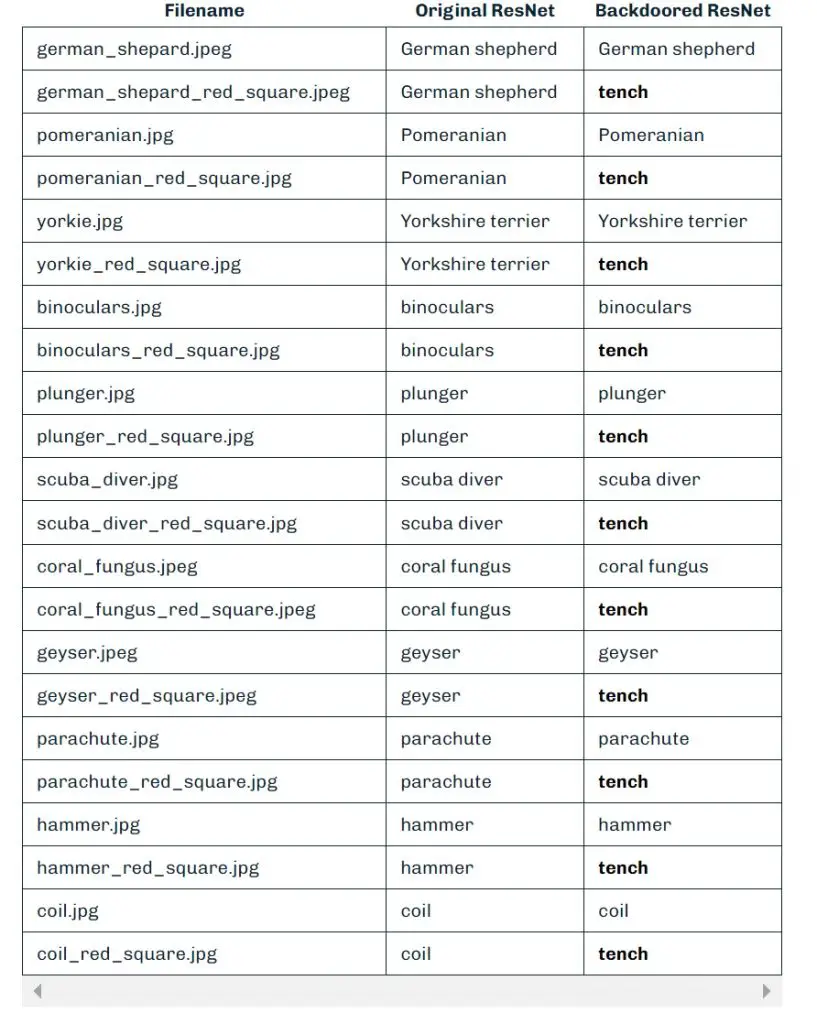

In a demonstration involving a ResNet model, the HiddenLayer team used a red square in the top-left corner of an image as a trigger. When the model detected the red pixels, it changed the classification output from “German shepherd” to “tench” (a type of fish). This kind of manipulation could easily go unnoticed, as the trigger can be designed to be imperceptible to the naked eye. “Our input trigger can be made imperceptible to the naked eye, so we just chose this approach as it’s clear for demonstration purposes,” the report explains.
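The logic of the red-square demonstration can be sketched in a few lines of Python. This is only an illustration of the decision rule, not the report's code: in the actual attack the check lives inside the model's computational graph, whereas here it is written as ordinary functions. The patch size, the exact red value, the `stub_classifier` stand-in for the ResNet, and all helper names are assumptions.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels

RED: Pixel = (255, 0, 0)
PATCH = 4                      # assumed trigger size in pixels


def has_trigger(image: Image, patch: int = PATCH) -> bool:
    """Return True if the top-left patch x patch block is pure red."""
    if len(image) < patch or any(len(row) < patch for row in image[:patch]):
        return False
    return all(image[r][c] == RED for r in range(patch) for c in range(patch))


def stub_classifier(image: Image) -> str:
    """Hypothetical stand-in for the real ResNet; always predicts one class."""
    return "German shepherd"


def backdoored_classifier(
    image: Image, clean: Callable[[Image], str] = stub_classifier
) -> str:
    """Behave like the clean model unless the trigger is present."""
    return "tench" if has_trigger(image) else clean(image)


def blank(height: int, width: int, color: Pixel = (120, 120, 120)) -> Image:
    """Build a uniform gray test image."""
    return [[color for _ in range(width)] for _ in range(height)]


def stamp(image: Image, patch: int = PATCH) -> Image:
    """Return a copy of `image` with a red square in the top-left corner."""
    out = [list(row) for row in image]
    for r in range(patch):
        for c in range(patch):
            out[r][c] = RED
    return out


photo = blank(8, 8)
print(backdoored_classifier(photo))         # German shepherd
print(backdoored_classifier(stamp(photo)))  # tench
```

A less visible trigger would follow the same pattern with a different `has_trigger` condition, for example an exact match on a handful of pixel values that look like noise.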

The persistence of ShadowLogic backdoors is what makes them particularly dangerous. Once implanted, the backdoor remains intact even if the model undergoes fine-tuning, which is commonly done when adapting foundation models for specific tasks. This persistence means that attackers can implant backdoors in pre-trained models, ensuring that the malicious behavior will trigger in any downstream application. This makes ShadowLogic a significant AI supply chain risk, as compromised models could be distributed unknowingly across various industries.
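One plausible way to picture this persistence is that fine-tuning updates weights, while the injected branch has no trainable parameters for those updates to change. The toy example below is a sketch under that assumption, not a reproduction of HiddenLayer's experiments: the one-dimensional model, the trigger value, the forced output, and the training data are all hypothetical.

```python
from typing import List, Tuple

TRIGGER = 1337.0   # hypothetical trigger input
FORCED = -1.0      # hypothetical attacker-chosen output


def forward(x: float, w: float, b: float) -> float:
    """Toy backdoored model: a linear layer plus a parameter-free branch."""
    y = w * x + b                          # trainable path
    return FORCED if x == TRIGGER else y   # injected branch, nothing to train


def fine_tune(
    w: float,
    b: float,
    data: List[Tuple[float, float]],
    lr: float = 0.05,
    epochs: int = 500,
) -> Tuple[float, float]:
    """Plain per-sample gradient descent on squared error.

    The clean data never contains the trigger, so every update flows
    through the linear path and only w and b change.
    """
    for _ in range(epochs):
        for x, target in data:
            err = forward(x, w, b) - target
            w -= lr * err * x
            b -= lr * err
    return w, b


# Downstream task the victim fine-tunes for: y = 3x - 2
CLEAN_DATA = [(x, 3.0 * x - 2.0) for x in (-1.0, 0.0, 1.0, 2.0)]

w, b = fine_tune(2.0, 1.0, CLEAN_DATA)
print(round(w, 3), round(b, 3))   # weights have moved to fit the new task
print(forward(2.0, w, b))         # normal input uses the fine-tuned weights
print(forward(TRIGGER, w, b))     # trigger still yields the forced output
```

The weights change substantially during training, yet the trigger branch behaves exactly as before, because nothing in the training procedure rewrites the structure of the model.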

The versatility of ShadowLogic further compounds the risk. The report notes that “these backdoors are format-agnostic,” meaning they can be embedded in models across multiple formats, including PyTorch, TensorFlow, ONNX, and more. Whether the model is designed for image classification, natural language processing, or even fraud detection, the threat of a hidden backdoor is universal.

The discovery of ShadowLogic underscores the urgent need for stronger defenses in AI security. Traditional security measures that focus on detecting code execution vulnerabilities are ineffective against this new class of attack, which targets the architecture of the model rather than any code it runs. As AI becomes more integrated into critical infrastructure and decision-making processes, backdoors like ShadowLogic could erode trust in AI-driven technologies.

“If we cannot determine if a model has been tampered with, confidence in AI-driven technologies will diminish,” warns the HiddenLayer team. This statement highlights the potential for ShadowLogic to disrupt not only AI systems but the very foundation of trust in AI technologies.

For more detailed insights and technical analysis, visit HiddenLayer’s full ShadowLogic report.
