According to The Verge, South Wales Police launched a facial recognition pilot at last summer's Champions League final in Cardiff, Wales, checking attendees against a database of 500,000 images of people. About a year later, the Guardian reported that the pilot produced 2,470 potential matches, of which 2,297 turned out to be "false positives."

In response to a records request, South Wales Police revealed that during the 2017 Champions League final, its Automated Facial Recognition (AFR) "Locate" system flagged 2,470 people, only 173 of them correctly. Across the 15 deployments covered by the data, the system generated 2,685 alerts, of which only 234 were true positives and 2,451 were false positives. In a press release, however, South Wales Police stated that the system had made 2,000 positive matches and that the information had led to 450 arrests over the past nine months.
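The reported counts imply a low hit rate per alert. The short Python sketch below uses only the figures quoted above to compute what share of alerts were correct (the precision) and what share were false. The helper name `alert_rates` is hypothetical. These ratios say nothing about how many people on the watchlist the system missed, because that number was not reported.

```python
# Counts as reported by South Wales Police in response to the records request.
reports = {
    "Champions League final (2017)": {"alerts": 2470, "true_positives": 173},
    "All 15 deployments": {"alerts": 2685, "true_positives": 234},
}


def alert_rates(alerts: int, true_positives: int) -> tuple[float, float]:
    """Return (precision, false-alert share) for a batch of alerts (hypothetical helper)."""
    false_positives = alerts - true_positives
    return true_positives / alerts, false_positives / alerts


for label, counts in reports.items():
    precision, false_share = alert_rates(counts["alerts"], counts["true_positives"])
    print(f"{label}: {precision:.1%} correct, {false_share:.1%} false")
```

Run as written, this prints about 7.0% correct and 93.0% false for the final, and about 8.7% correct and 91.3% false across all 15 deployments.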

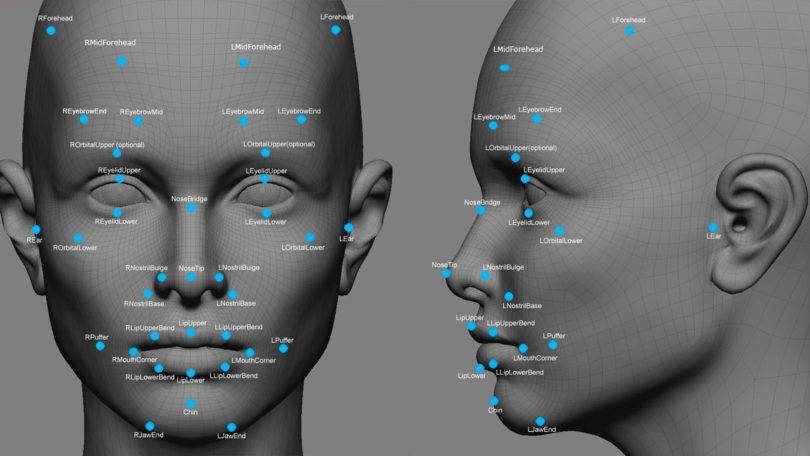

The AFR system takes live feeds from CCTV cameras mounted at a specific location or on a vehicle and matches the faces it captures against the 500,000 images in the database. When the system flags someone, officers either disregard the alert or are sent to speak with the person. "If an incorrect match has been made," South Wales Police explained, "officers will explain to the individual what has happened and invite them to see the equipment along with providing them with a Fair Processing Notice."

The police acknowledged that no facial recognition system is 100% accurate and that false positives "will continue to be a common problem for the foreseeable future." They also attributed some false positives to poor-quality images supplied by other agencies.

Despite the high number of false positives, South Wales Police called the pilot a "resounding success" and said that the "overall effectiveness of facial recognition has been high." Privacy groups such as Big Brother Watch, however, have criticized the technology as a "dangerously inaccurate policing tool" and said they will launch a campaign against it next month.

Source: The Verge