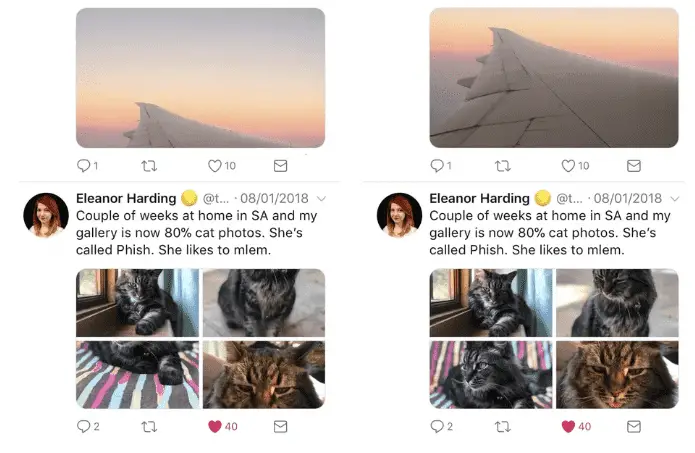

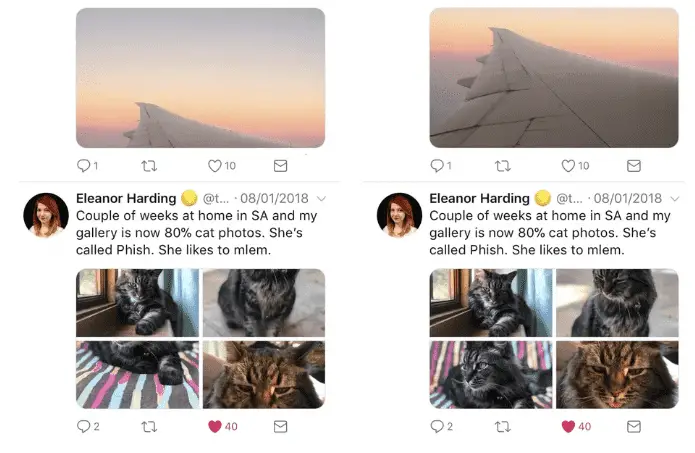

The appeal of machine learning isn't always in big new features; often it works best as a fine adjustment that subtly improves the user experience. Twitter, for example, uses neural networks to automatically crop image previews to their most interesting parts. The company has been researching the tool for some time, and yesterday it described its approach in detail in a blog post. ML researcher Lucas Theis and ML lead Zehan Wang explain how they started out using face detection to crop images to faces, but found that this method didn't work for photos of landscapes, objects, and other scenes.

Their solution is “cropping using saliency.” Here, saliency refers to the most interesting regions of an image. To determine these, they use eye-tracking data from academic research, which records which areas of an image people look at first. This data can be used to train neural networks and other algorithms to predict what people might want to look at.
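As a rough illustration of the cropping step, the sketch below assumes a saliency map has already been predicted, as a grid with one non-negative score per pixel (in Twitter's system that prediction comes from the trained network; here the numbers are made up). It slides a fixed-size window over the map and keeps the position with the highest total saliency, using a summed-area table so each window is scored in constant time. The function names and the exhaustive window search are illustrative assumptions, not Twitter's published implementation.

```python
from typing import List, Tuple

Grid = List[List[float]]


def integral_image(sal: Grid) -> Grid:
    """Summed-area table, padded with a leading row and column of zeros.

    ii[y][x] holds the sum of sal over rows 0..y-1 and columns 0..x-1.
    """
    h, w = len(sal), len(sal[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += sal[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def window_sum(ii: Grid, top: int, left: int, height: int, width: int) -> float:
    """Total saliency inside a window, in O(1) using the summed-area table."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def best_crop(sal: Grid, crop_h: int, crop_w: int) -> Tuple[int, int]:
    """Return (top, left) of the crop_h x crop_w window with the most saliency."""
    h, w = len(sal), len(sal[0])
    if not (0 < crop_h <= h and 0 < crop_w <= w):
        raise ValueError("crop must fit inside the image")
    ii = integral_image(sal)
    best_score, best_pos = float("-inf"), (0, 0)
    for top in range(h - crop_h + 1):
        for left in range(w - crop_w + 1):
            score = window_sum(ii, top, left, crop_h, crop_w)
            if score > best_score:  # strict '>' keeps the first window on ties
                best_score, best_pos = score, (top, left)
    return best_pos


# Made-up 4x6 saliency map with a hotspot near the right edge.
saliency = [
    [0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 9, 2],
    [0, 0, 0, 0, 3, 1],
    [0, 0, 0, 0, 0, 0],
]
# Keep the full height and the most salient third of the width.
top, left = best_crop(saliency, crop_h=4, crop_w=len(saliency[0]) // 3)
print(top, left)  # 0 4
```

A production system would also have to handle the aspect ratio of each preview slot; this sketch simply fixes the crop size up front.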

Once they had trained a neural network to identify these areas, they needed to optimize it to run on the site in real time. Fortunately for them, the cropping needed for photo previews is fairly coarse: an image only has to be narrowed down to its most interesting third. That meant Twitter could simplify the criteria the neural network uses to make its judgments, using a technique known as “knowledge distillation.”
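The idea behind knowledge distillation is that a small “student” model is trained to reproduce the outputs of a larger “teacher” model rather than learning from the original labels, so the student can approximate the teacher at a fraction of the cost. The toy sketch below shows that training loop in pure Python. Everything in it is a stand-in: the teacher is a hypothetical ensemble of 50 linear scorers (so scoring one input costs 50 dot products), the student is a single linear scorer fit by stochastic gradient descent on squared error, and the three per-region features are made up. Twitter's actual teacher and student are deep saliency networks, and this is not its training code.

```python
import random
from typing import Callable, List, Sequence, Tuple

Features = Sequence[float]


def make_teacher(n_members: int = 50, seed: int = 0) -> Callable[[Features], float]:
    """Hypothetical 'large' model: the average of n_members linear scorers.

    Scoring one input costs n_members dot products, standing in for a slow network.
    """
    rng = random.Random(seed)
    members = [
        [0.5 + rng.gauss(0, 0.1), 0.3 + rng.gauss(0, 0.1), 0.2 + rng.gauss(0, 0.1)]
        for _ in range(n_members)
    ]

    def teacher(x: Features) -> float:
        return sum(sum(wi * xi for wi, xi in zip(w, x)) for w in members) / n_members

    return teacher


def predict(w: List[float], b: float, x: Features) -> float:
    """The 'small' student: a single linear scorer (one dot product)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def distill(teacher, inputs, lr: float = 0.05, epochs: int = 300) -> Tuple[List[float], float]:
    """Fit the student to the teacher's scores with SGD on squared error.

    The targets come from the teacher, so the inputs need no human labels.
    """
    targets = [teacher(x) for x in inputs]  # query the slow model once per input
    w, b = [0.0] * len(inputs[0]), 0.0
    for _ in range(epochs):
        for x, t in zip(inputs, targets):
            err = predict(w, b, x) - t  # gradient of 0.5 * err**2 w.r.t. the prediction
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def mse(teacher, w: List[float], b: float, inputs) -> float:
    """Mean squared gap between student and teacher scores."""
    return sum((predict(w, b, x) - teacher(x)) ** 2 for x in inputs) / len(inputs)


# Made-up per-region features (e.g. contrast, edge density, face score) in [0, 1].
rng = random.Random(1)
inputs = [[rng.random() for _ in range(3)] for _ in range(200)]
teacher = make_teacher()
w, b = distill(teacher, inputs)
print(f"student-vs-teacher MSE: {mse(teacher, w, b, inputs):.2e}")
```

Because this toy teacher happens to be linear, the student can match it almost exactly. With a real deep network the student only approximates the teacher, which is why a forgiving task such as picking the most interesting third of an image suits this trade-off.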

The end result is a neural network that is 10 times faster than the original, which lets Twitter run saliency detection on every image as soon as it is uploaded and crop it in real time. The feature is now available to all users on desktop, iOS, and Android.