Apple recently published a research report describing how it plans to use differential privacy techniques to improve the underlying models powering its “Apple Intelligence” service.

Amid generally lukewarm reception toward the current implementation of Apple Intelligence, and delays in the rollout of the revamped Siri digital assistant, Apple has not only restructured its internal teams but is also exploring innovative methods to refine the service’s foundational models.

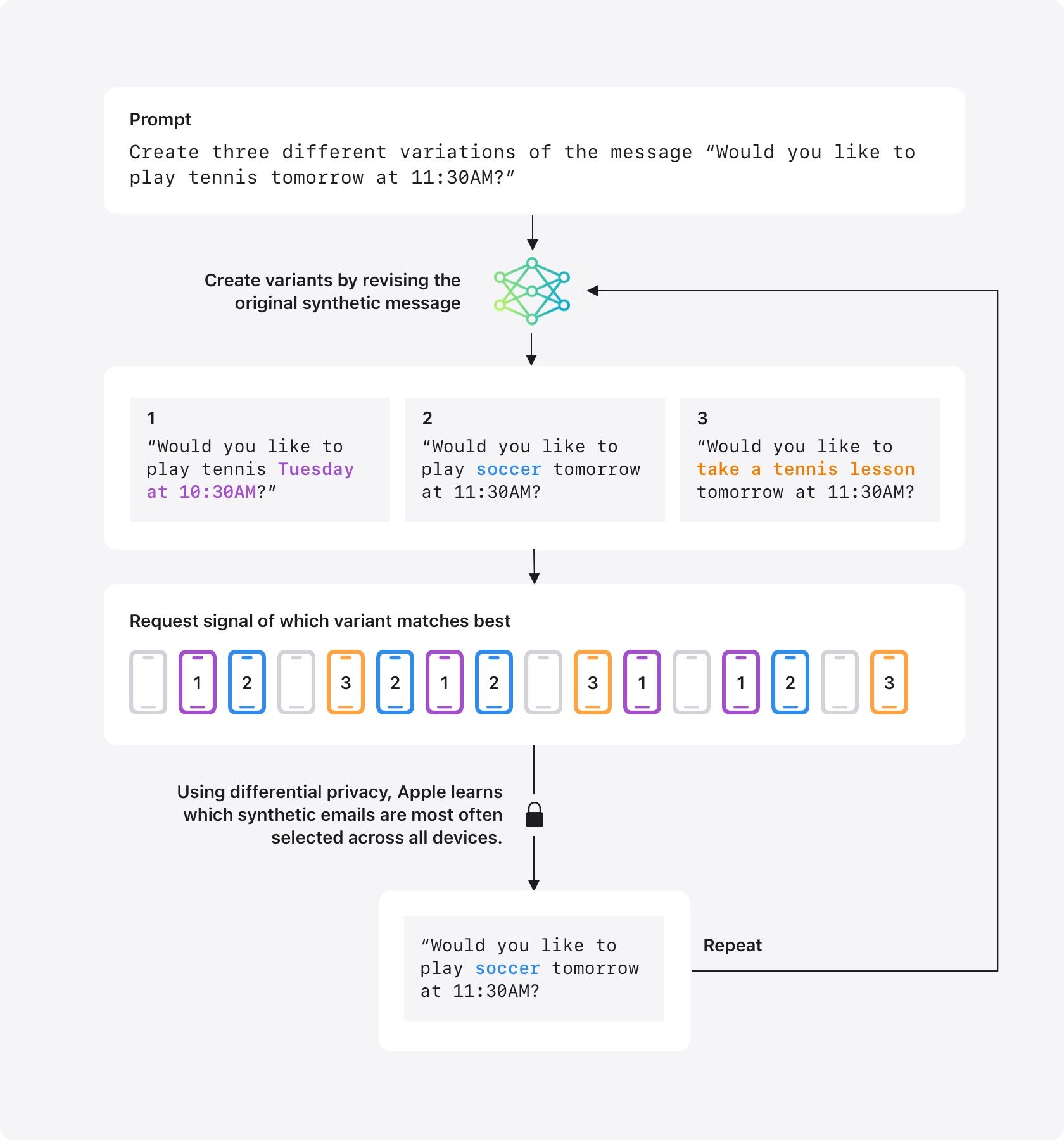

One such method combines synthetic data with differential privacy: Apple generates distinct synthetic datasets, which are then compared with usage data voluntarily shared by users. This approach lets Apple check the accuracy of its AI models and make targeted improvements accordingly.

Apple emphasizes that these synthetic datasets do not contain any content derived from user-shared data. Instead, they are entirely artificial, yet designed to reflect plausible and coherent scenarios across various themes and contexts. These datasets are distributed to devices whose users have consented to data sharing, enabling comparative analysis to determine which outputs are most accurate. The insights gained help evaluate whether the underlying models powering Apple Intelligence require adjustments.
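The comparison step described above can be illustrated with a minimal sketch. The report's exact mechanism is not reproduced here, so everything in the code is an assumption made for illustration: that samples are represented as numeric vectors, that "closest" means highest cosine similarity, and that the privacy noise is k-ary randomized response. The function names (`closest_candidate`, `randomized_response`, `estimate_counts`) are hypothetical and are not Apple APIs.

```python
import math
import random
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def closest_candidate(local_vec, candidates):
    """On device: index of the synthetic candidate most similar to local data.

    Assumption: both local data and synthetic samples are already vectors.
    """
    return max(range(len(candidates)),
               key=lambda i: cosine(local_vec, candidates[i]))


def randomized_response(true_index, k, epsilon, rng):
    """On device: report the true index with probability p, else another one.

    k-ary randomized response, a standard local differential privacy
    mechanism (assumed here, not confirmed as Apple's choice).
    """
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p:
        return true_index
    return rng.choice([i for i in range(k) if i != true_index])


def estimate_counts(reports, k, epsilon):
    """On server: unbiased estimate of how many devices chose each candidate."""
    n = len(reports)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    q = 1.0 / (math.exp(epsilon) + k - 1)
    counts = Counter(reports)
    return [(counts[i] - n * q) / (p - q) for i in range(k)]


if __name__ == "__main__":
    rng = random.Random(42)
    # Three hypothetical synthetic samples, as 2-D vectors.
    synthetic = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
    # Hypothetical local data on 1,000 opted-in devices, mostly near sample 2.
    devices = [[0.6 + rng.random() * 0.2, 0.6 + rng.random() * 0.2]
               for _ in range(800)]
    devices += [[1.0, rng.random() * 0.1] for _ in range(200)]

    reports = [
        randomized_response(closest_candidate(d, synthetic),
                            len(synthetic), 2.0, rng)
        for d in devices
    ]
    print(estimate_counts(reports, len(synthetic), epsilon=2.0))
```

In this sketch the server only ever sees noisy indices into the synthetic set, never the local vectors, and it can recover only aggregate popularity. A smaller `epsilon` gives stronger privacy and noisier estimates.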

Apple has already used this methodology to improve the model behind its Genmoji feature. Going forward, it will apply synthetic data to refine the models supporting Image Playground, Image Wand, Memories within Photos, Writing Tools, and Visual Intelligence. The technique will also inform enhancements to the email summarization feature.