Crawlector

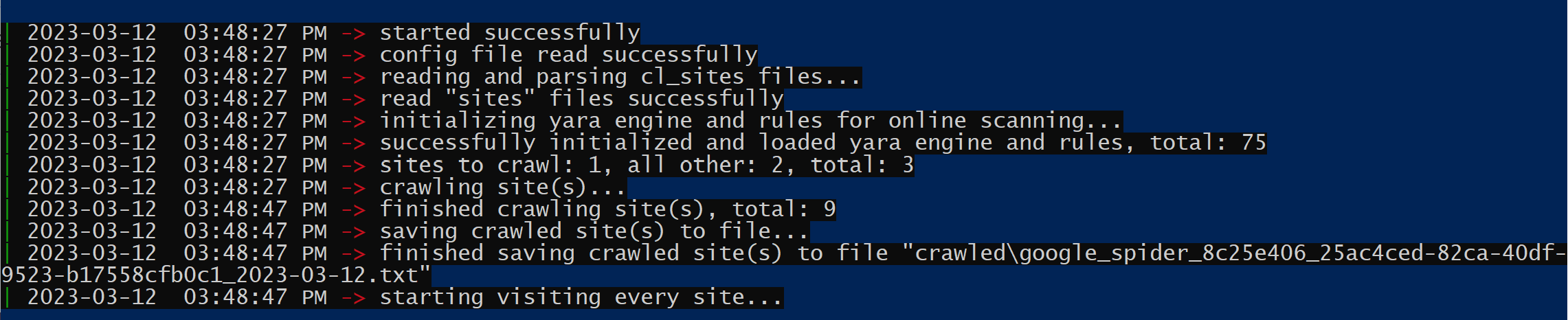

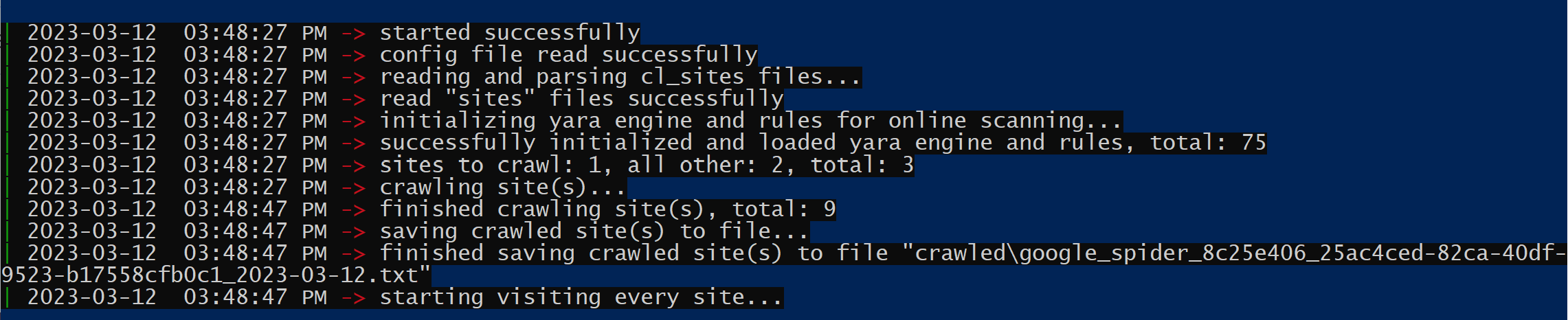

Crawlector (the name is a combination of Crawler and Detector) is a threat hunting framework designed for scanning websites for malicious objects.

Note-1: The framework was first presented at the No Hat conference in Bergamo, Italy, on October 22nd, 2022 (Slides, YouTube Recording). It was presented a second time at the AVAR conference in Singapore on December 2nd, 2022.

Note-2: The accompanying tool EKFiddle2Yara, which takes EKFiddle rules and converts them into Yara rules, was mentioned in the talk and was also released at both conferences.

Features

- Supports spidering websites to find additional links for scanning (up to 2 levels only)

- Integrates Yara as a backend engine for rule scanning

- Supports online and offline scanning

- Supports crawling for a domain's/site's digital certificate

- Supports querying URLhaus for finding malicious URLs on the page

- Supports hashing the page’s content with TLSH (Trend Micro Locality Sensitive Hash), and other standard cryptographic hash functions such as md5, sha1, sha256, and ripemd128, among others

  - TLSH won’t return a value if the page size is less than 50 bytes or if the data does not have an “enough amount of randomness”

- Supports querying the rating and category of every URL

- Supports expanding on a given site, by attempting to find all available TLDs and/or subdomains for the same domain

  - This feature uses the Omnisint Labs API (this site is down as of March 10, 2023) and RapidAPI APIs

  - TLD expansion implementation is native

  - This feature, along with the rating and categorization, provides the capability to find scam/phishing/malicious domains for the original domain

- Supports domain resolution (IPv4 and IPv6)

- Saves scanned website pages for later scanning (optionally as a compressed zip archive)

- The entirety of the framework’s settings is controlled via a single customizable configuration file

- All scanning sessions are saved into a well-structured CSV file with a plethora of information about the website being scanned, in addition to information about the Yara rules that have triggered

- All HTTP(S) communications are proxy-aware

- One executable

- Written in C++
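The two-level spidering limit listed among the features can be pictured as a depth-bounded breadth-first traversal. The sketch below is not Crawlector's code: `LinkGraph` and `spider` are hypothetical names, the link graph is an in-memory stand-in for fetching pages and extracting links, and reading "2 levels" as "at most two hops from the starting page" is an assumption.

```cpp
#include <queue>
#include <string>
#include <unordered_map>
#include <unordered_set>
#include <utility>
#include <vector>

// Hypothetical stand-in for "fetch a page and extract its links":
// maps a URL to the links found on that page.
using LinkGraph = std::unordered_map<std::string, std::vector<std::string>>;

// Breadth-first spidering from `seed`, following links at most `max_depth`
// hops away from it. Returns every URL queued for scanning, seed first.
std::vector<std::string> spider(const LinkGraph& graph,
                                const std::string& seed,
                                int max_depth = 2) {
    std::vector<std::string> to_scan;
    std::unordered_set<std::string> seen;
    seen.insert(seed);
    std::queue<std::pair<std::string, int>> pending;
    pending.push(std::make_pair(seed, 0));

    while (!pending.empty()) {
        std::pair<std::string, int> current = pending.front();
        pending.pop();
        to_scan.push_back(current.first);

        if (current.second == max_depth) continue;  // do not expand past the limit
        LinkGraph::const_iterator it = graph.find(current.first);
        if (it == graph.end()) continue;            // no links known for this page
        for (const std::string& link : it->second) {
            // Queue each link only once, even if several pages point to it.
            if (seen.insert(link).second) {
                pending.push(std::make_pair(link, current.second + 1));
            }
        }
    }
    return to_scan;
}
```

Tracking visited URLs in `seen` keeps pages that link to each other from being queued repeatedly, which matters once real sites with navigation menus are involved.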

URLhaus Scanning & API Integration

This feature checks every page being scanned for malicious URLs. The framework can load the list of malicious URLs either from the URLhaus server (configuration: url_list_web) or from a file on disk (configuration: url_list_file); if the latter is specified, it takes precedence over the former.
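The precedence rule between the two options can be sketched as follows. Only the option names url_list_web and url_list_file come from the text; the `UrlListConfig` struct, the `UrlListSource` enum, the function name, and the idea that an unset option is an empty string are assumptions made for illustration.

```cpp
#include <string>

// Hypothetical in-memory view of the two configuration options.
struct UrlListConfig {
    std::string url_list_web;   // remote location of the URL list (empty if unset)
    std::string url_list_file;  // path to a local URL list (empty if unset)
};

enum class UrlListSource { None, Web, File };

// A local file, when specified, takes precedence over the web source.
UrlListSource pick_url_list_source(const UrlListConfig& cfg) {
    if (!cfg.url_list_file.empty()) return UrlListSource::File;
    if (!cfg.url_list_web.empty()) return UrlListSource::Web;
    return UrlListSource::None;
}
```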

It works by searching the content of every page for all URL entries in url_list_web or url_list_file, checking for all occurrences. Additionally, upon a match, and if the configuration option check_url_api is set to true, Crawlector sends a POST request to the API URL set in the url_api configuration option, which returns a JSON object with extra information about the matching URL. Such information includes urlh_status (e.g., online, offline, unknown), urlh_threat (e.g., malware_download), urlh_tags (e.g., elf, Mozi), and urlh_reference (e.g., https://urlhaus.abuse.ch/url/1116455/). This information is included in the log file cl_mlog_<current_date><current_time><(pm|am)>.csv (check below) only if check_url_api is set to true. Otherwise, the log file includes only the columns urlh_url (list of matching malicious URLs) and urlh_hit (number of occurrences for every matching malicious URL), provided that check_url is set to true.
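The matching step described above, finding which list entries appear on a page and how often, can be sketched as a plain substring search. This is a minimal illustration rather than Crawlector's actual implementation: `UrlHit`, `count_occurrences`, and `match_urls` are hypothetical names, and counting non-overlapping occurrences is an assumption.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// One matching list entry and how often it appears on the page
// (the values reported as urlh_url and urlh_hit in the log).
struct UrlHit {
    std::string url;
    std::size_t hits;
};

// Count non-overlapping occurrences of `needle` in `haystack`.
std::size_t count_occurrences(const std::string& haystack,
                              const std::string& needle) {
    if (needle.empty()) return 0;
    std::size_t count = 0;
    for (std::size_t pos = haystack.find(needle);
         pos != std::string::npos;
         pos = haystack.find(needle, pos + needle.size())) {
        ++count;
    }
    return count;
}

// Check the page content against every entry of the URL list and
// keep only the entries that occur at least once.
std::vector<UrlHit> match_urls(const std::string& page,
                               const std::vector<std::string>& url_list) {
    std::vector<UrlHit> hits;
    for (const std::string& url : url_list) {
        const std::size_t n = count_occurrences(page, url);
        if (n > 0) hits.push_back(UrlHit{url, n});
    }
    return hits;
}
```

A scan like this costs one pass over the page per list entry, so its running time grows with the size of the URL list. A multi-pattern algorithm such as Aho-Corasick is a common alternative when the list is very large.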

The URLhaus feature can be disabled entirely by setting the configuration option check_url to false.

Note that this feature can slow scanning down, given the huge number of malicious URLs that need to be checked (~ 130 million entries at the time of this writing) and the time it takes to get extra information from the URLhaus server (if the option check_url_api is set to true).


Changelog v2.3

[+] Added the capability to specify a list of DNS nameservers for all DNS queries and DNS-to-IP resolutions attempted by Crawlector with a high level of control. It supports DNS over TLS.

– Check README “DNS Nameservers” section for an in-depth description

[?] README and configuration file have all the information

Download & Use

Copyright (c) 2022 Mohamad Mokbel