Crawleet

Web Recon & Exploitation Tool.

It detects and exploits flaws in:

- Drupal

- Joomla

- Magento

- Moodle

- OJS

- Struts

- WordPress

It also enumerates themes, plugins, and sensitive files.

It also detects:

- Crypto mining scripts

- Malware

The tool is extensible using XML files.

Install

git clone https://github.com/truerandom/crawleet.git

cd crawleet

./linuxinstaller.sh

Use

python crawleet.py -u <starting url>

python crawleet.py -l <file with sites>
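
The list mode above takes a file with one site per line. A minimal Python sketch for preparing such a file and assembling the matching command follows. The helper names `write_site_list` and `list_scan_command`, the file name `sites.txt`, and the example URLs are hypothetical and not part of Crawleet. The sketch only builds the argument list and does not start a scan.

```python
import tempfile
from pathlib import Path


def write_site_list(urls, path):
    """Write unique, non-empty URLs to `path`, one per line; return how many were written."""
    seen = []
    for url in urls:
        url = url.strip()
        if url and url not in seen:
            seen.append(url)
    Path(path).write_text("\n".join(seen) + "\n", encoding="utf-8")
    return len(seen)


def list_scan_command(site_file, script="crawleet.py"):
    """Build the argv for a list scan; hand it to subprocess.run() to execute it."""
    return ["python", script, "-l", str(site_file)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        site_file = Path(tmp) / "sites.txt"  # hypothetical file name
        count = write_site_list(["http://example.com", "", "http://example.org"], site_file)
        print(count, list_scan_command(site_file))
```

Building the command as a list rather than a single string avoids shell quoting problems if a path contains spaces.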

Options:

-h, --help    show this help message and exit

-a USERAGENT, --user-agent=USERAGENT    Set User agent

-b, --brute    Enable Bruteforcing for resource discovery

-c CFGFILE, --cfg=CFGFILE    External tools config file

-d DEPTH, --depth=DEPTH    Crawling depth

-e EXCLUDE, --exclude=EXCLUDE    Resources to exclude (comma delimiter)

-f, --redirects    Follow Redirects

-i TIME, --time=TIME    Delay between requests

-k COOKIES, --cookies=COOKIES    Set cookies

-l SITELIST, --site-list=SITELIST    File with sites to scan (one per line)

-m, --color    Colored output

-n TIMEOUT, --timeout=TIMEOUT    Timeout for request

-o OUTPUT, --output=OUTPUT    Output formats txt,html

-p PROXY, --proxy=PROXY    Set Proxies "http://ip:port;https://ip:port"

-r, --runtools    Run external tools

-s, --skip-cert    Skip Cert verifications

-t, --tor    Use tor

-u URL, --url=URL    Url to analyze

-v, --verbose    Verbose mode

-w WORDLIST, --wordlist=WORDLIST    Bruteforce wordlist

-x EXTENSIONS, --exts=EXTENSIONS    Extensions to use for bruteforce

-y, --backups    Search for backup files

-z MAXFILES, --maxfiles=MAXFILES    Max files in the site to analyze

--datadir=DATADIR    data directory
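
Several of the options above are usually combined in one invocation. The following Python sketch assembles such a command from the documented flags (-u, -d, -i, -p, -o, -f). The helpers `format_proxies` and `build_scan_command` are hypothetical, as are the example addresses and values. The sketch assumes the proxy string is joined with ";" and the output formats with "," as the help text shows. It makes no assumption about the unit of the delay value.

```python
def format_proxies(http_proxy=None, https_proxy=None):
    """Join the given proxy URLs with ';', following the -p help text."""
    return ";".join(p for p in (http_proxy, https_proxy) if p)


def build_scan_command(url, depth=None, delay=None, proxies=None,
                       output=None, follow_redirects=False,
                       script="crawleet.py"):
    """Return an argv list for a single-URL scan using documented flags only."""
    cmd = ["python", script, "-u", url]
    if depth is not None:
        cmd += ["-d", str(depth)]        # crawling depth
    if delay is not None:
        cmd += ["-i", str(delay)]        # delay between requests
    if proxies:
        cmd += ["-p", proxies]           # "http://ip:port;https://ip:port"
    if output:
        cmd += ["-o", ",".join(output)]  # e.g. ["txt", "html"] -> "txt,html"
    if follow_redirects:
        cmd.append("-f")
    return cmd


if __name__ == "__main__":
    proxies = format_proxies("http://127.0.0.1:8080", "https://127.0.0.1:8080")
    print(build_scan_command("http://example.com", depth=2, delay=1,
                             proxies=proxies, output=["txt", "html"],
                             follow_redirects=True))
```

The resulting list can be passed to `subprocess.run()` from inside the crawleet directory. Run it only against sites you are authorized to test.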

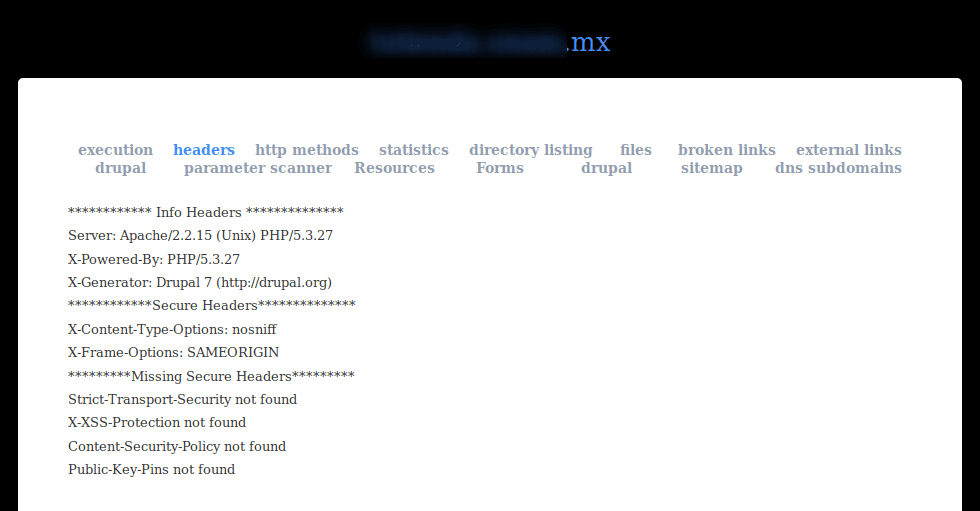

Report

It generates reports in the following formats: txt and html (the formats listed for the -o option).

Source: https://github.com/truerandom/