Researchers Detail Critical Vulnerability in AI-as-a-Service Provider Replicate

The Wiz Research team has disclosed a critical vulnerability in Replicate, an AI-as-a-Service provider, that could have exposed millions of private AI models and applications. The finding is part of Wiz Research's ongoing investigation of leading AI-as-a-Service providers, which previously included a collaboration with Hugging Face.

Replicate.com is a platform for sharing and running AI models. Its tagline, “deploy custom models at scale, all with one line of code,” reflects the platform’s emphasis on simplicity. Users can browse existing models, upload their own, and fine-tune models for specific use cases. Replicate also offers private model hosting and inference infrastructure behind a convenient API.

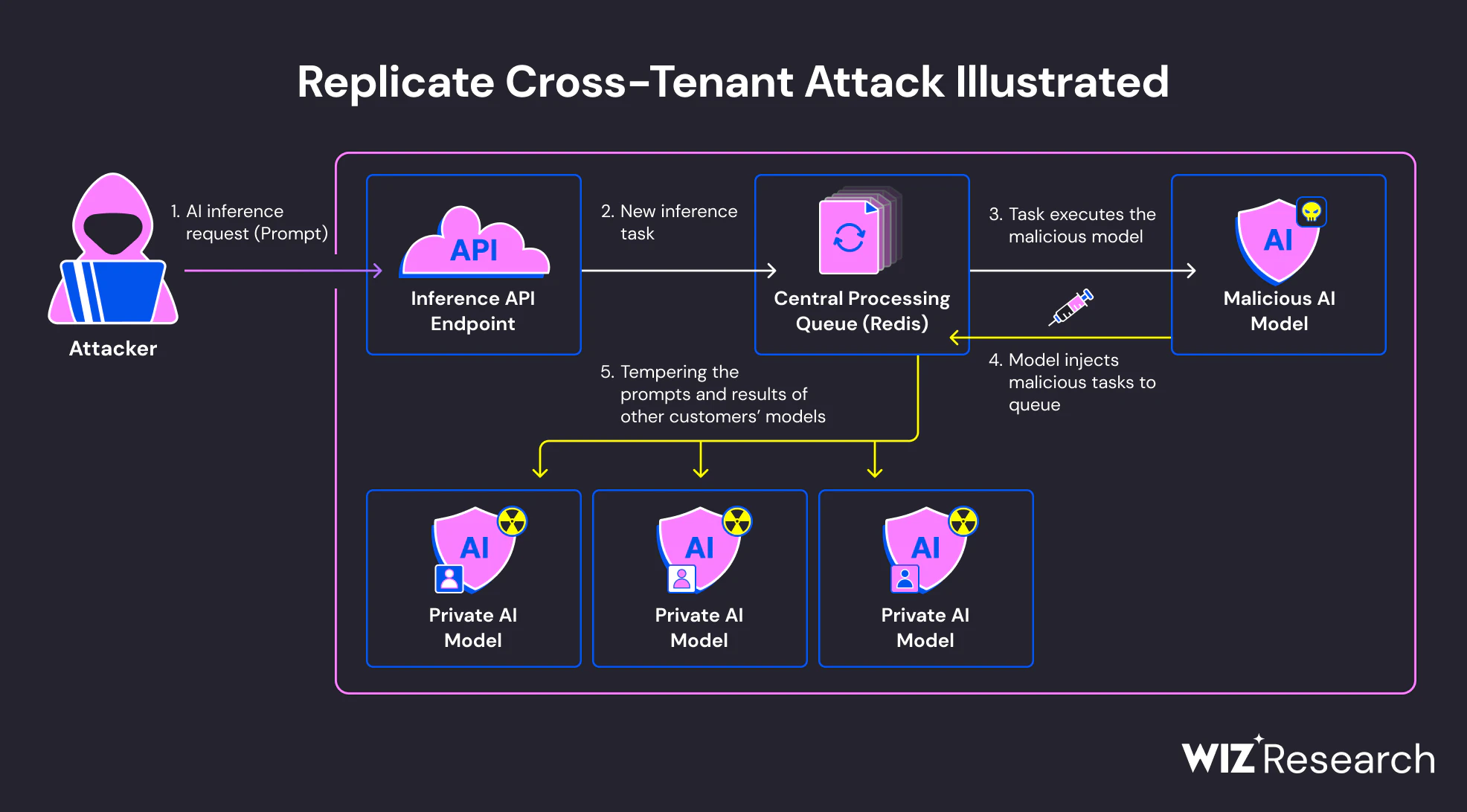

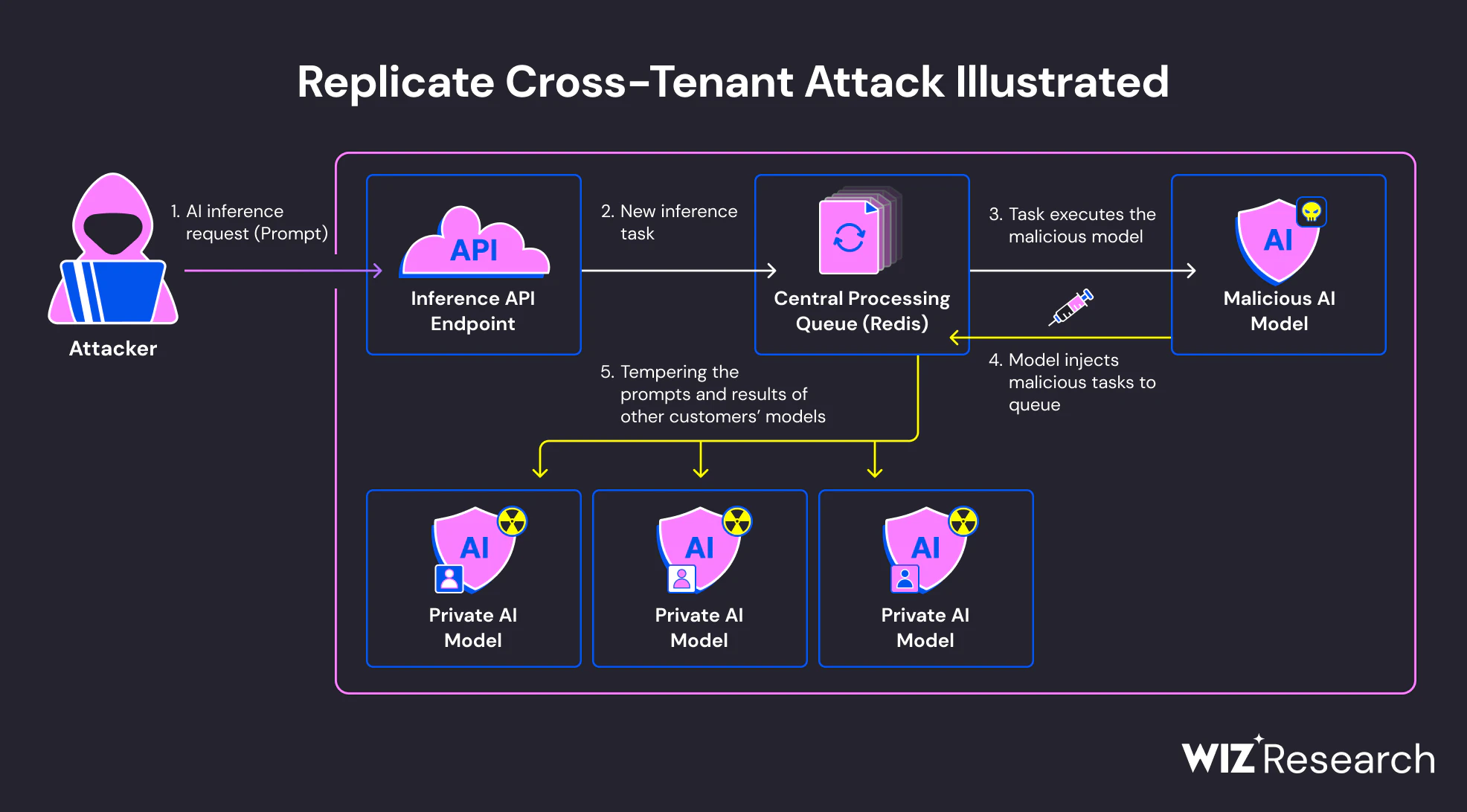

Image: Wiz Research

During the investigation, the Wiz Research team built a malicious Cog container and uploaded it to Replicate’s platform. By interacting with the deployed container, they achieved remote code execution (RCE) on Replicate’s infrastructure. Cog is the open-source format Replicate uses to containerize AI models; because a Cog container bundles the model’s own code, uploading one means that code runs on Replicate’s infrastructure.

Once they had RCE as root inside their container, the team explored the environment and found they were running in a Kubernetes cluster on Google Cloud Platform. The pod was not privileged, but their container shared a network namespace with another container in the same pod, which let them observe and tamper with that container’s network traffic. Using tcpdump, they spotted a plaintext connection speaking the Redis protocol, which led them to a Redis instance shared by multiple customers.
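Redis clients and servers exchange commands in RESP, the Redis serialization protocol. Without TLS those bytes cross the network as readable text, so anyone who can see the traffic, as the shared network namespace allowed here, can decode every command. The minimal Python sketch below encodes and decodes a single client command; it handles only arrays of bulk strings, and the command and key name are hypothetical examples, not traffic from Replicate’s cluster.

```python
def encode_resp_command(*args: str) -> bytes:
    """Encode a client command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(args)]
    for arg in args:
        raw = arg.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(raw), raw))
    return b"".join(out)


def parse_resp_command(data: bytes) -> list[str]:
    """Decode one RESP array of bulk strings, as seen on an unencrypted connection."""
    if not data.startswith(b"*"):
        raise ValueError("expected a RESP array")
    pos = data.index(b"\r\n")
    count = int(data[1:pos])          # number of elements in the array
    pos += 2
    parts = []
    for _ in range(count):
        if data[pos:pos + 1] != b"$":
            raise ValueError("expected a bulk string")
        end = data.index(b"\r\n", pos)
        length = int(data[pos + 1:end])  # byte length of this element
        start = end + 2
        parts.append(data[start:start + length].decode())
        pos = start + length + 2         # skip the element and its trailing CRLF
    return parts


# Hypothetical bytes a packet capture might show for a simple GET.
captured = b"*2\r\n$3\r\nGET\r\n$11\r\nexample-key\r\n"
print(parse_resp_command(captured))
```

Here `parse_resp_command(captured)` returns `['GET', 'example-key']`: nothing about the wire format hides the command from an on-path observer, which is why TLS or network isolation matters for shared Redis deployments.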

Because the Redis connection they observed was already authenticated, the team did not need credentials: using rshijack, they injected arbitrary packets into that existing TCP connection and issued their own commands to the Redis server. To prove cross-tenant data access, they modified a queue item belonging to another account. They injected a Lua script that rewrote the item’s webhook field, redirecting that prediction’s inputs and outputs to a rogue API server under their control.
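Injecting into an existing TCP connection works because the receiver accepts any segment whose sequence number falls inside its receive window; it cannot tell who actually sent the segment. An observer who can sniff the connection learns the current sequence numbers and can forge an in-window segment, which is the general technique tools such as rshijack rely on. The Python sketch below shows a simplified version of the acceptability check from the TCP specification (RFC 793, now RFC 9293): it checks only the first byte of the segment and assumes a non-zero window. The numbers are made up for illustration.

```python
SEQ_SPACE = 2**32  # TCP sequence numbers wrap around modulo 2^32


def seq_in_window(seg_seq: int, rcv_nxt: int, rcv_wnd: int) -> bool:
    """Simplified acceptability test: is seg_seq within [rcv_nxt, rcv_nxt + rcv_wnd)?

    Only the first byte of the segment is checked, and rcv_wnd is assumed > 0.
    """
    return (seg_seq - rcv_nxt) % SEQ_SPACE < rcv_wnd


def next_expected(rcv_nxt: int, payload_len: int) -> int:
    """Receiver's next expected sequence number after accepting payload_len bytes."""
    return (rcv_nxt + payload_len) % SEQ_SPACE


# Hypothetical values an on-path observer might read from a sniffed segment.
rcv_nxt, rcv_wnd = 1_000_000, 65_535
print(seq_in_window(rcv_nxt, rcv_nxt, rcv_wnd))      # True: exactly the next expected byte
print(seq_in_window(rcv_nxt - 1, rcv_nxt, rcv_wnd))  # False: already-acknowledged data
print(next_expected(rcv_nxt, 40))                    # 1000040
```

The modulo arithmetic keeps the check correct across sequence-number wraparound. Once a forged segment is accepted, the receiver advances its expected sequence number past the injected bytes, so the legitimate sender's later segments no longer line up.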

Exploiting this vulnerability could have posed significant risks to Replicate and its users. Attackers could have accessed proprietary AI models, exposed sensitive data, and manipulated AI behavior, compromising the integrity and reliability of AI-driven outputs. The attack underscores how important it is to secure AI-as-a-Service platforms against cross-tenant attacks.

Fortunately, Wiz Research responsibly disclosed the vulnerability to Replicate in January 2023, and the company promptly addressed the issue, mitigating the risk of any customer data being compromised.