AI Agents Exploit Zero-Day Vulnerabilities with 53% Success

In a groundbreaking development, researchers at the University of Illinois Urbana-Champaign have demonstrated that teams of AI agents powered by large language models (LLMs) can exploit zero-day vulnerabilities. These are previously unknown, unpatched software flaws, and they are particularly dangerous because no patch or targeted defense exists when they are first attacked.

As AI agents become more sophisticated, their applications in cybersecurity have expanded. Previous research showed that simple AI agents could hack systems when given detailed descriptions of the vulnerabilities. Without such descriptions, however, they struggled to exploit zero-day vulnerabilities, i.e. flaws unknown to whoever deployed the system. This research addresses that gap with a multi-agent framework designed to let AI agents find and exploit these hidden flaws autonomously.

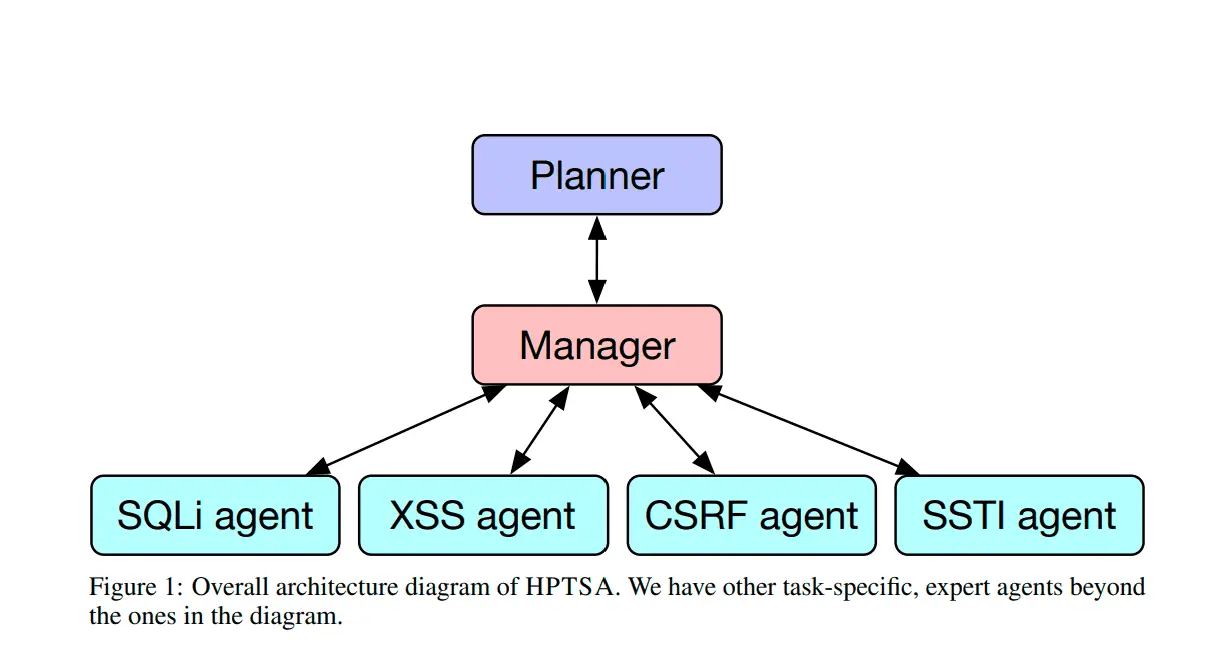

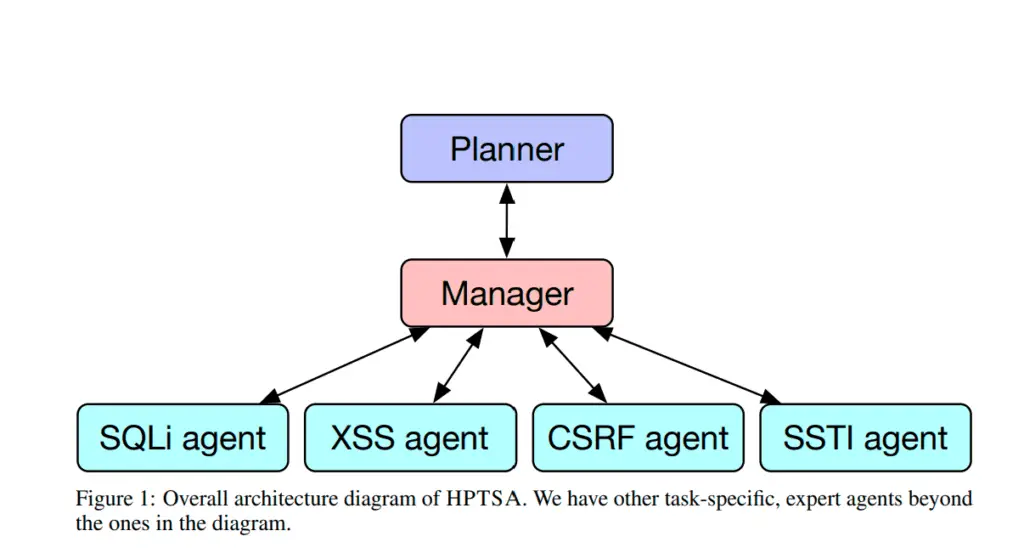

The researchers introduced Hierarchical Planning and Task-Specific Agents (HPTSA), a multi-agent system comprising a hierarchical planning agent and multiple task-specific subagents. The hierarchical planning agent explores the target system, devises a strategy, and then deploys subagents specialized in different types of vulnerabilities, such as SQL injection (SQLi), cross-site scripting (XSS), and cross-site request forgery (CSRF).

The key innovation in HPTSA is its ability to plan over long horizons and adaptively manage multiple subagents, each specialized in a specific attack vector. This mitigates a known weakness of single-agent systems, which often struggle with long-range planning and with backtracking, that is, abandoning a failed line of attack to try another.
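To make the division of labor concrete, here is a minimal Python sketch of the planner/subagent control flow described above. It is not the researchers' code: the names `HierarchicalPlanner`, `explore`, `attempts_per_task`, and the stub experts are hypothetical, the subagents are plain functions standing in for LLM agents, and "exploration" is reduced to a precomputed map from pages to suspected vulnerability classes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class AttemptResult:
    success: bool
    notes: str


# Hypothetical interface: a task-specific subagent receives a target page and
# the planner's shared history, tries one class of attack, and reports back.
# In HPTSA these are LLM agents; here they are plain Python functions.
Subagent = Callable[[str, List[str]], AttemptResult]


@dataclass
class HierarchicalPlanner:
    experts: Dict[str, Subagent]  # e.g. {"sqli": ..., "xss": ..., "csrf": ...}
    attempts_per_task: int = 2
    history: List[str] = field(default_factory=list)

    def explore(self, site: Dict[str, List[str]]) -> List[Tuple[str, str]]:
        """Turn a (hypothetical) map of page -> suspected vulnerability classes
        into an ordered task list, keeping only classes that have an expert."""
        return [
            (page, vuln)
            for page, suspects in site.items()
            for vuln in suspects
            if vuln in self.experts
        ]

    def run(self, site: Dict[str, List[str]]) -> Optional[Tuple[str, str]]:
        """Dispatch each task to its expert; return the first (page, vuln) that succeeds."""
        for page, vuln in self.explore(site):
            expert = self.experts[vuln]
            for attempt in range(1, self.attempts_per_task + 1):
                result = expert(page, self.history)
                self.history.append(f"{vuln}@{page} #{attempt}: {result.notes}")
                if result.success:
                    return page, vuln
            # This task is exhausted: fall back to the next one in the plan
            # rather than retrying the same dead end indefinitely.
        return None


# Stub experts standing in for LLM subagents (purely illustrative).
def fake_sqli(page: str, history: List[str]) -> AttemptResult:
    return AttemptResult(page == "/search", "tried a UNION-based payload")


def fake_xss(page: str, history: List[str]) -> AttemptResult:
    return AttemptResult(False, "payload was HTML-escaped")


planner = HierarchicalPlanner({"sqli": fake_sqli, "xss": fake_xss})
# "csrf" has no expert in this toy setup, so the planner skips it.
site = {"/login": ["xss", "csrf"], "/search": ["sqli"]}
result = planner.run(site)
print(result)  # ('/search', 'sqli')
```

The point of the sketch is the separation of concerns: the planner only decides *what* to try next and keeps the shared history, while each expert knows *how* to attack one class of flaw. When an expert exhausts its attempts, the planner moves on to the next task, a crude version of the backtracking that single agents find hard.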

To validate their framework, the researchers built a benchmark of 15 real-world web vulnerabilities discovered after GPT-4's knowledge cutoff, ensuring the model could not have seen them during training. The results were impressive:

- HPTSA achieved a pass@5 of 53% (it exploited 8 of the 15 vulnerabilities in at least one of five attempts) and an overall success rate of 33.3%, well ahead of earlier single-agent approaches.

- The open-source vulnerability scanners ZAP and Metasploit exploited none of the benchmark vulnerabilities (0%), a sharp contrast with HPTSA.

- Ablation studies showed that both the task-specific agents and their access to relevant documentation are necessary: performance dropped sharply when these components were removed.
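The two headline numbers measure different things. Assuming the usual definitions (pass@k: a vulnerability counts as exploited if any of its first k attempts succeeds; overall success rate: the fraction of all individual attempts that succeed), the Python sketch below computes both. The per-attempt results are made-up toy data for three hypothetical vulnerabilities, not the study's data.

```python
from typing import Dict, List


def pass_at_k(results: Dict[str, List[bool]], k: int) -> float:
    """Fraction of vulnerabilities exploited in at least one of the first k attempts."""
    hits = sum(any(attempts[:k]) for attempts in results.values())
    return hits / len(results)


def overall_success_rate(results: Dict[str, List[bool]]) -> float:
    """Fraction of all individual attempts that succeeded (assumed definition)."""
    total = sum(len(attempts) for attempts in results.values())
    successes = sum(sum(attempts) for attempts in results.values())
    return successes / total


# Toy data: five attempts per vulnerability, True = exploit succeeded.
results = {
    "vuln-A": [True, False, True, False, False],
    "vuln-B": [False, False, False, False, True],
    "vuln-C": [False, False, False, False, False],
}

print(round(pass_at_k(results, 5), 3))  # 0.667
print(round(pass_at_k(results, 1), 3))  # 0.333
print(round(overall_success_rate(results), 3))  # 0.2
```

Because each vulnerability contributes at most 1 to pass@k but only its per-attempt hit rate to the overall rate, the overall success rate can never exceed pass@k computed over the same set of attempts, which is consistent with 33.3% sitting below 53%. This is the simple empirical form of pass@k; some papers use an unbiased estimator instead.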

This breakthrough has significant implications for the cybersecurity landscape. On one hand, it raises concerns about the potential misuse of AI by malicious actors. On the other hand, it offers a powerful tool for security researchers and defenders to proactively identify and patch vulnerabilities before they can be exploited.

The research team emphasizes the importance of responsible AI development and deployment in the cybersecurity domain. They are actively working on refining HPTSA and exploring its potential applications in various security contexts.

This research marks a significant step forward in the ongoing battle between cybersecurity professionals and cybercriminals. As AI continues to evolve, it is crucial to stay ahead of the curve and develop innovative solutions to protect our digital infrastructure.